cjcox

2[H]4U

- Joined: Jun 7, 2004
- Messages: 2,974

Perhaps this is just a "poor person's" perspective, but the "class" of user that can afford $1500 is the same one that can afford $2500 (to me).


I sure hope this rumored $2500 price for the upcoming RTX 5090 isn't correct. It's said to be 60% to 70% faster than the current RTX 4090:

https://www.pcgamesn.com/nvidia/geforce-rtx-5090-performance

I think it will be 70% faster, +/- 7% depending on the game.

Seriously! I was thinking about a next-gen Toyota 4Runner, until I saw the Tacoma got a $16,000 price hike for the TRD Pro model with the new generation. Oh well, I may just settle for a used one in a few years.

View attachment 654843

View attachment 654844

The new Tacomas and Tundras are all sitting on dealership lots with over 100 days of inventory. No one is buying them because their prices are ridiculous and they have issues. They are also still gas guzzlers while being hilariously overpriced. Unless you NEED a truck like that for work or towing, it's a terrible idea. Soon enough they will bring in the Toyota Stout, which will appeal to a broader share of the market, be much MUCH cheaper, come as a hybrid, and save a ton of money on gas, likely at 40+ mpg. The Maverick is doing very well, so Toyota and GMC need to compete. Let's hope they release their variants soon.

At least you'll be getting something you know will last multiple decades if you take care of it.

As for the 5090, I'm skipping it. I'll get one after its value depreciates to $600 in 3 years, like the 3090's price now. I can wait; I have a 4090.

Kind of where I'm at. I'll just pretend 3-year-old stuff is the latest and greatest; I don't have enough time to game anyway, especially if prices are going to increase forever. It's just not sustainable unless the average working person is also making that much more.

Not true. I saved up to buy a 3090 on launch day. Then I started saving $50 a month until the 5000 series comes out, which should put me close to $2500 if it is that price. If you are smart with your money, you can afford these types of GPUs.

How does that go against the notion that the type of person ready to spend $1500 on a GPU would consider a $2500 one if the performance gap is big enough? My personal logic: once you go there for the best, you're in "I want the best" mode and not looking at the price tag much.

I did the opposite. Instead of saving up $50 a month and then buying a 4090 in one go, I bought a 4090 on a monthly payment plan through Best Buy with 0% interest. It's really not hard to make the monthly payments at all; just skip Starbucks, fast food, and other small expenses that you do not need.

If there is an admin fee, calling it 0% interest is a bit of semantics.

This is my thought process as well. My heavy gaming days are about over and the 4090 will last me a long time.

Always good to skip a gen if you can. I'd at least wait for a 5090 Ti variant or a refresh if I were on a 4090.

Going from a 4090 to a 5090 Ti is "skipping a gen"?

I was starting to think the MSRP would be the same as last time; however, with the 25% tariffs resuming in June, I think it'll be $1699 plus 25% on top, which brings you to $1699 + $425 = $2124. That being said, my final guess is $2199.

The tariffs won't apply since the cards will not be built in China anyway. The 4090 is currently assembled outside China because of the AI compute-density regulations, and that will be true of the 5090 as well.
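The $2124 estimate above is simple arithmetic: $1699 × 1.25 = $2123.75. A minimal sketch, assuming (as the estimate does) that the full tariff is passed straight through on top of MSRP; duties are typically assessed on the declared import value rather than the retail price, so treat this only as a back-of-envelope figure. The `tariff_applies` flag is a hypothetical parameter reflecting the point that cards assembled outside China would not be affected.

```python
def estimated_price(msrp: float, tariff_rate: float, tariff_applies: bool = True) -> float:
    """Back-of-envelope retail price, assuming the whole tariff is added on top of MSRP."""
    if not tariff_applies:
        # e.g. cards assembled outside the tariffed country keep their MSRP
        return msrp
    return msrp * (1 + tariff_rate)


print(f"${estimated_price(1699, 0.25):,.2f}")                        # $2,123.75
print(f"${estimated_price(1699, 0.25, tariff_applies=False):,.2f}")  # $1,699.00
```

Rounded, the first result is the $2124 quoted in the thread; the $2199 guess adds a further margin on top of that.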

Ah, that makes sense. Is this true of other cards? How will they handle that disparity, I wonder?

Kopite with such a leak. I think everyone wants smaller and shorter cards and less heat output.

If the card does scale poorly across its power band like Lovelace (we imagine that was discovered late), this could be a good way for Nvidia to save money.

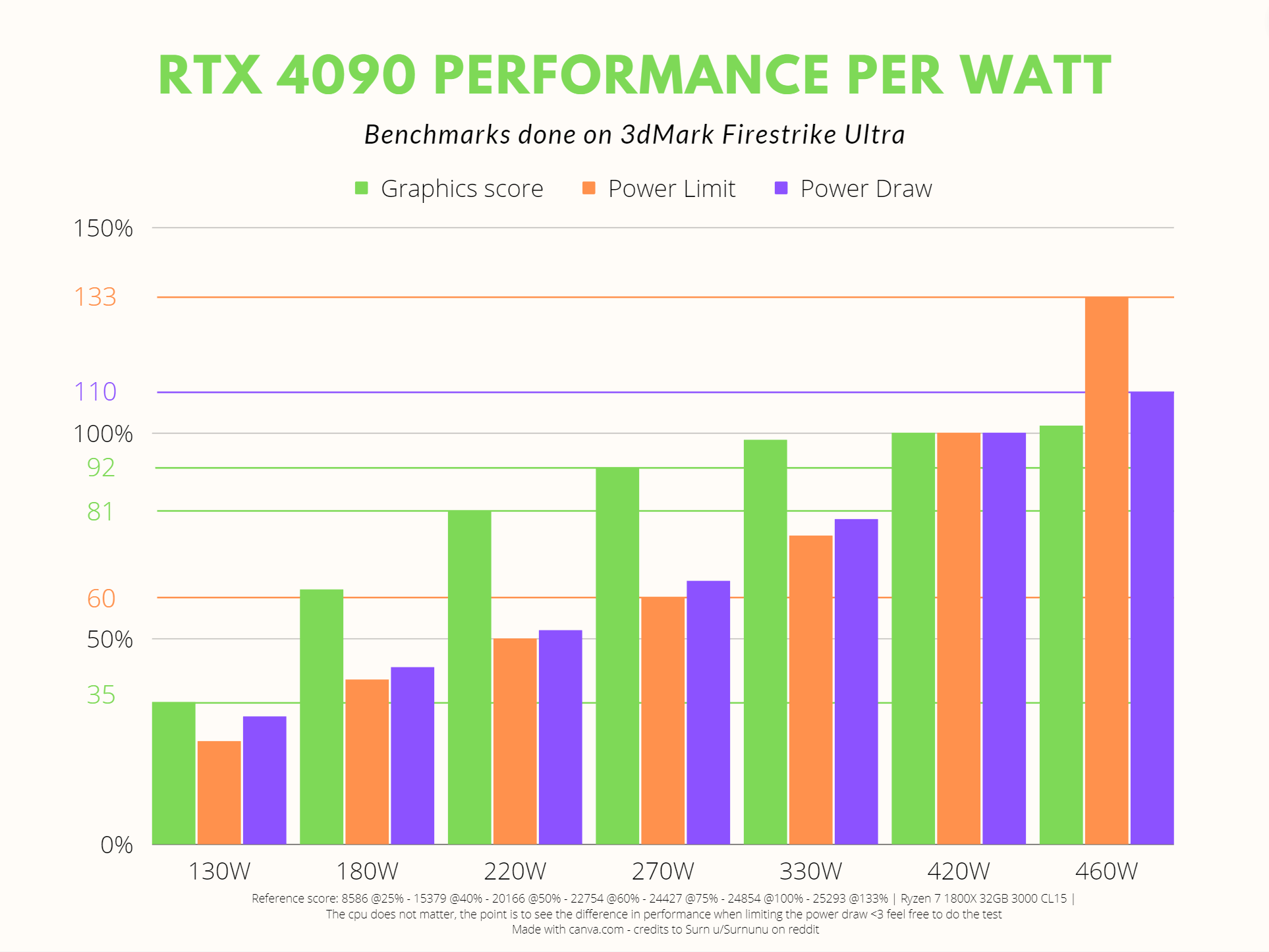

Good chart. Yeah the 4090 is not really starved for power and certainly would have been just fine as a 350W card.If the card do scale poorly in the power band like lovelace (we imagine that was discoverd late), could be a good way for Nvidia to save money.

Cooling get better each generation and would a 350watt 4090 have been much weaker ?

View attachment 656424

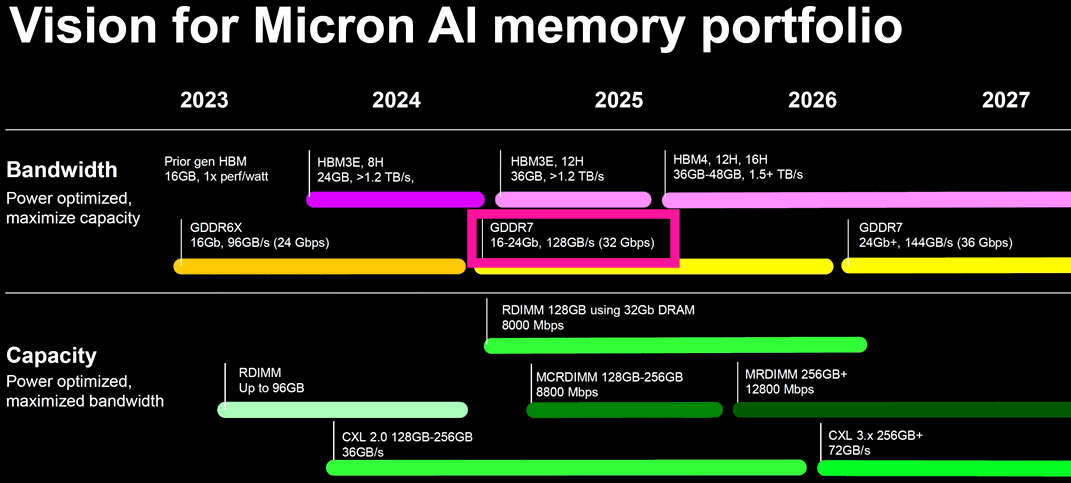

With how much the take can be driven by efficacy in mind and early result GDDR7 could be way more efficiant than GDDR6 let alone the hungry 6X version

Contrast that to Ampere, and my 3080 Ti FE would absolutely love to have more power past its cap.

I still find it hilarious that prior to the 4090 and RDNA3 coming out, rumors were saying the 4090 might have to "juice up its power draw" in order to compete with top RDNA3, when in reality juicing up the power draw from 450W to 600W on a 4090 barely gets you anything lmfao. If it couldn't compete at 450W, then giving it 33% more power would not have changed anything. I'm seeing my 4090 typically draw only around 360-380 watts in games, so yeah, a well-designed 2-slot cooler probably would have been just fine for it.

Considering the power and cooling system, Nvidia themselves could have thought that and been wrong; the latest node processes are a bit of magic/alchemy that simulators can be wrong about.

From my understanding the 4090 is memory-bandwidth starved; swapping to GDDR7 and a larger bus should ameliorate that problem. I think the two-slot thing might be true because there ought to be fewer memory modules at 2GB per module, right? I think GDDR7 is also more efficient.

Rumors point to more memory modules (16 modules instead of 12, i.e. 512 bits and 32GB) in quite a dense configuration. We'll have to see about that, but yes, it should be significantly more efficient. GDDR6 was already 2GB per module; in the future GDDR7 could have 3, 4, or even 8GB modules and more flexibility, at least that's the plan, but the first generation should stick to regular 2GB (16Gb) modules.
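The module counts and capacities above follow from the bus width: each GDDR6/GDDR6X/GDDR7 device has a 32-bit interface, so a board with one device per 32-bit slice needs `bus_width / 32` modules. A minimal sketch, assuming no clamshell mode (clamshell boards put two devices on each 32-bit slice and double the capacity); the 2GB default mirrors the first-gen density mentioned in the post.

```python
BITS_PER_MODULE = 32  # interface width of one GDDR6/GDDR6X/GDDR7 device


def memory_config(bus_width_bits: int, gb_per_module: int = 2) -> tuple[int, int]:
    """Return (module_count, total_capacity_gb), assuming one device per 32-bit slice."""
    if bus_width_bits % BITS_PER_MODULE:
        raise ValueError("bus width must be a multiple of 32 bits")
    modules = bus_width_bits // BITS_PER_MODULE
    return modules, modules * gb_per_module


for bus in (384, 448, 512):
    modules, capacity = memory_config(bus)
    print(f"{bus}-bit: {modules} modules x 2 GB = {capacity} GB")
# 384-bit: 12 modules x 2 GB = 24 GB   (RTX 4090)
# 448-bit: 14 modules x 2 GB = 28 GB
# 512-bit: 16 modules x 2 GB = 32 GB
```

With the denser 3GB GDDR7 parts the post anticipates, `memory_config(512, 3)` would give 16 modules and 48GB on the same bus.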

The 4090 performs about 15% worse at 350W than at 600W in demanding games, e.g. Metro Exodus (Enhanced Edition). I hope the 5090 can scale up to 600W for those who want that option. However, if the 5090 has a dual-slot design, it might max out at around 400W, which could still be just as effective, depending on the efficiency gains from the node shrink and the switch to GDDR7. Efficiency improvements, especially in scaling, are likely a focus for NVIDIA given the demands of the AI sector. I see firsthand how costly it is to operate AI-focused data centers, so improvements in efficiency there will likely benefit gaming GPUs as well.
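To see why a lower power limit can still be the more efficient choice, compare performance per watt rather than raw performance. A minimal sketch, assuming the "15%" figure for the 4090 means the card keeps about 85% of its 600W performance at a 350W limit (the post does not say exactly how it was measured); the function name is hypothetical.

```python
def relative_perf_per_watt(perf_a: float, watts_a: float, perf_b: float, watts_b: float) -> float:
    """How many times more performance per watt configuration A delivers than configuration B."""
    return (perf_a / watts_a) / (perf_b / watts_b)


# Assumed reading of the post: at 350 W the card keeps 85% of its 600 W performance.
ratio = relative_perf_per_watt(0.85, 350, 1.0, 600)
print(f"350 W vs 600 W: {ratio:.2f}x the performance per watt")  # 1.46x
```

This uses the power limits as a stand-in for actual draw; as noted earlier in the thread, a 4090 often draws well under its limit in games, so measured numbers would shift the ratio.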

A new rumor seems to indicate the full GB202 won't be used in the 5090. I would imagine they'd like to use the perfect chips for AI, and this will still be a big enough upgrade over the current 4090 to justify either a similar or a slightly higher price. Honestly, if it's the same MSRP with a two-slot cooler, I might be in for one. I really, unabashedly can't stand Jensen as a person, but that'll be a sick card.

https://videocardz.com/newz/nvidia-rtx-5090-new-rumored-specs-28gb-gddr7-and-448-bit-bus

Isn't this currently the case with the 4090? I'm pretty sure the 4090 isn't actually the full AD102 die, as they reserve those for AI cards instead. As for knocking down the memory specs a bit, I'm pretty fine with that if it's going to help reduce the power draw like you said, because a 448-bit bus with 28GB of VRAM is honestly plenty for a new flagship card. The uplift in memory bandwidth over a 4090 is still a whopping 50%, and going for the full 512-bit bus and 32GB would just add more heat and cost that may not be worth it in the end.

The 4090 isn't the full die, indeed.

Correct, the 4090 is a cut-down AD102.
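The bandwidth uplift can be sanity-checked: peak memory bandwidth is the bus width in bytes times the per-pin data rate. The 4090's 384-bit GDDR6X bus at 21 Gbps gives 1008 GB/s. The 28 Gbps GDDR7 data rate used for the rumored 448-bit card below is an assumption for illustration, not a figure stated in this thread.

```python
def bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak bandwidth in GB/s: bus width in bytes x per-pin data rate in Gbps."""
    return bus_width_bits / 8 * data_rate_gbps


rtx_4090 = bandwidth_gb_s(384, 21)      # GDDR6X at 21 Gbps
rumored_5090 = bandwidth_gb_s(448, 28)  # ASSUMPTION: GDDR7 at 28 Gbps
uplift = rumored_5090 / rtx_4090 - 1
print(f"{rtx_4090:.0f} GB/s -> {rumored_5090:.0f} GB/s (+{uplift:.0%})")
# 1008 GB/s -> 1568 GB/s (+56%)
```

Under that assumption the uplift lands a little above the 50% mentioned; a full 512-bit bus at the same data rate would give 1792 GB/s.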