jedihobbit

Gawd

- Joined: Nov 3, 2005
- Messages: 963

Trying to stretch a friend's system (NF7-S w/ Sempron 3000+ @ 2.4) by upgrading his aging PNY 6800GT to an AGP version of the X1950 Pro. I originally thought the extra ½ in. of length wasn't an issue. The card length itself wasn't, but read on.

Here is what we got:

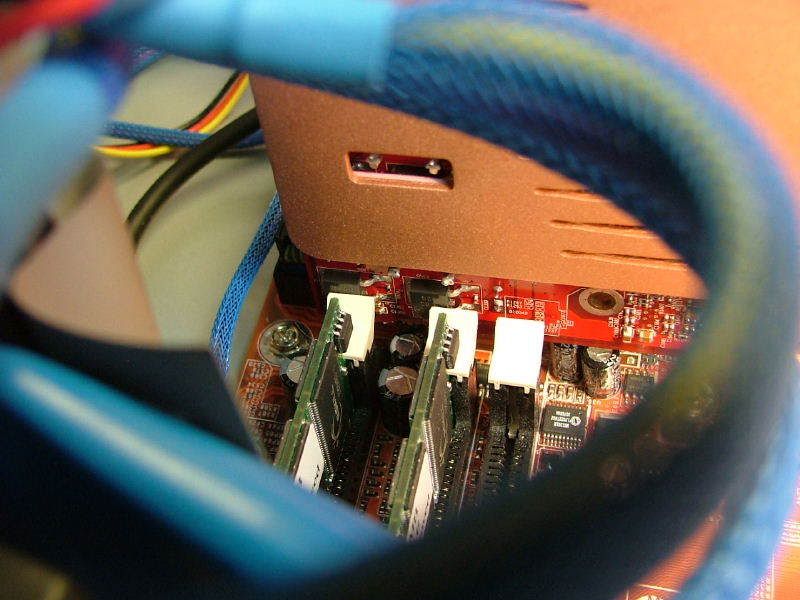

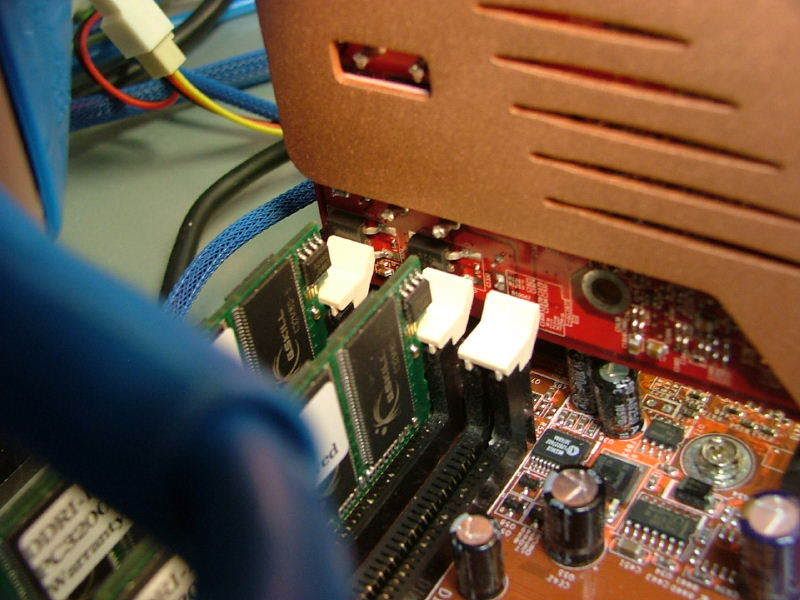

As usual for me, the "simple" in "simple upgrade" never seems to be there! I guess it's the extra circuitry for AGP, but this card has a whole lot of BS at the end that made the fit a real pain.

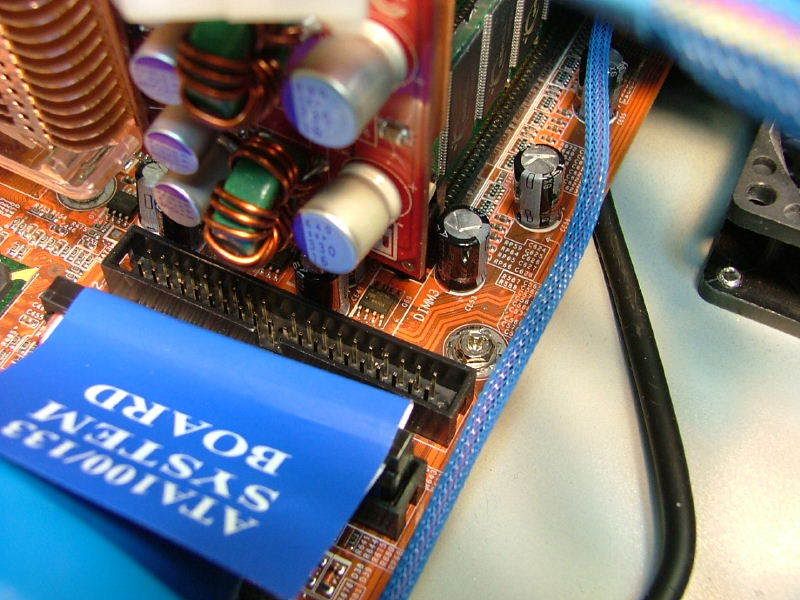

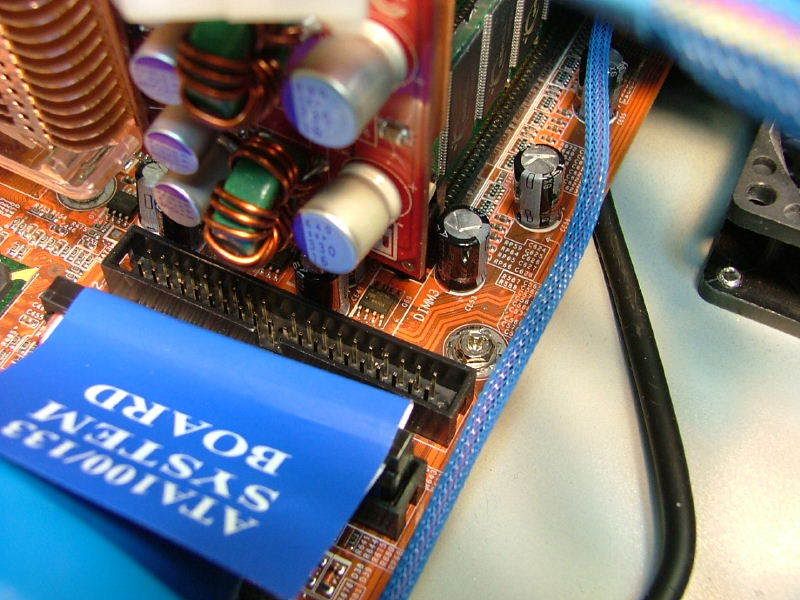

First off, the original IDE cable for the optical drives was too short once the new card was in, and it got knocked out of its socket.

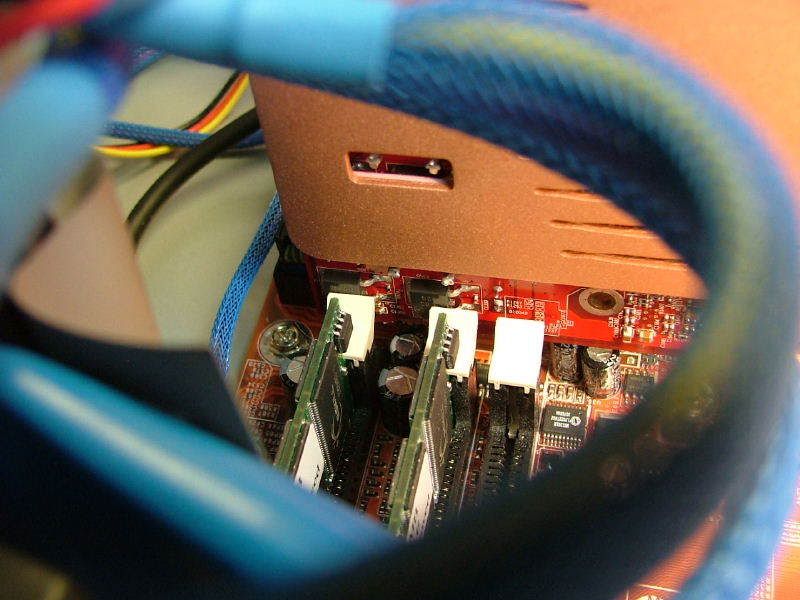

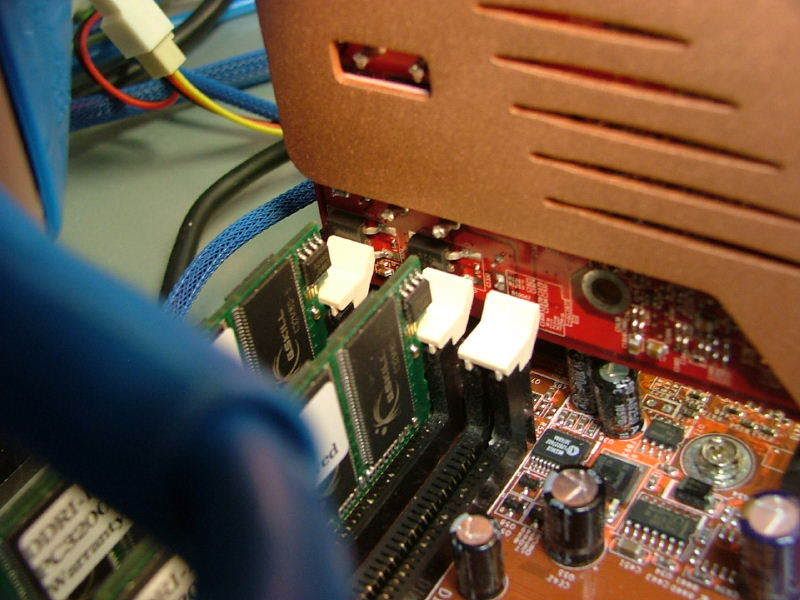

Also, something still didn't feel right, so I looked at the top side and found that a couple of small thingies on the card had unlatched the last memory stick. You have to do a little twist and rotate when seating the card to avoid snagging the latch.

Luckily, I had a longer IDE cable among my Celtic Spirit parts that I could raid.

It's in the system and running Catalyst 7.2, I believe, and I'm wondering if maybe I expected too much. What I'm seeing could be the CPU limit. Here is my only reference, PNY 6800GT @ 404/1132 vs. the stock Visiontek X1950 Pro:

| Benchmark | PNY 6800GT @ 404/1132 | Visiontek X1950 Pro (stock) |
|---|---|---|
| 3DMark03 | 12221 | 13087 |
| 3DMark05 | 5695 | 7545 |
| 3DMark06 | 3251 | 4332 |
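To put those scores in perspective, it helps to look at the percentage gain in each test. Here's a quick Python sketch of that arithmetic using only the numbers above (the `scores` dict and `percent_gain` helper are just my own names for illustration, not from any benchmark tool):

```python
# Scores from the comparison above: (6800GT @ 404/1132, stock X1950 Pro)
scores = {
    "3DMark03": (12221, 13087),
    "3DMark05": (5695, 7545),
    "3DMark06": (3251, 4332),
}

def percent_gain(old: int, new: int) -> float:
    """Relative improvement of the new score over the old one, in percent."""
    return (new - old) / old * 100.0

for name, (old, new) in scores.items():
    # One decimal place is plenty for run-to-run benchmark noise
    print(f"{name}: {percent_gain(old, new):.1f}% higher")
```

Run against these numbers, that works out to roughly 7% in 03 and about a third in 05 and 06.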

Don't know. Is that a respectable increase for an almost $200.00 outlay? I just don't have a feel for it, so please enlighten me.


![[H]ard|Forum](/styles/hardforum/xenforo/logo_dark.png)