MrWizard6600

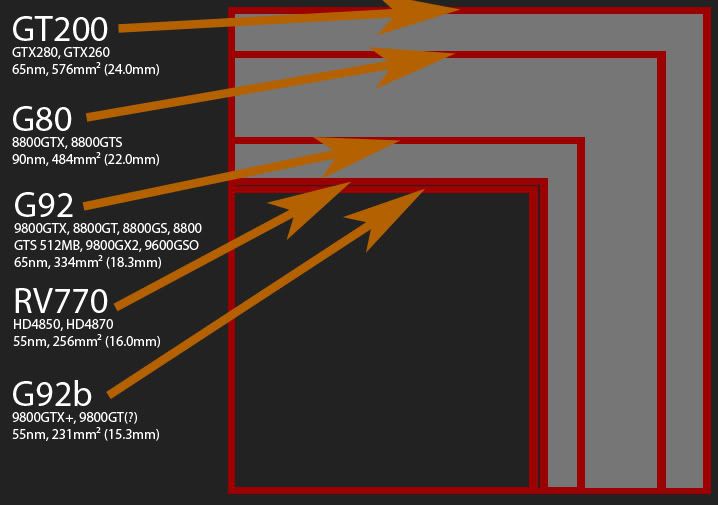

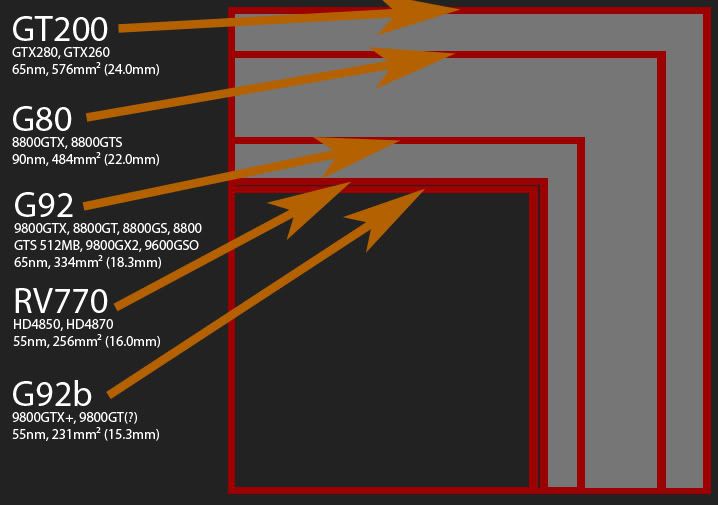

After wondering just who's making more money than whom per card, I created this little picture to help myself (and anyone else wondering the same thing) understand:

Just to clarify: the smallest die is the G92b, represented by the smallest red box there, not the RV770. The RV770 is the box right beside it, a mere 4% bigger.

edit: the size of the G92b has become a bit of a controversy. PC Perspective, using a ruler, says 231mm²; users at Beyond3D say 270mm², which is more in line with the estimates that were circulating before the core was released. Either way, we know that the G92b should be very close (or perhaps identical) to the RV770 in terms of production costs.

And my margin for error is represented perfectly by the corners of the dies. If I had been perfect, all four corners would line up in a perfectly straight line (since all the dies are square). They don't, so I made some errors somewhere. I went with numbers given to me online (usually 3 sig figs).
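
For anyone who wants to redo the boxes, here's a rough sketch of the arithmetic, assuming each die is drawn as a square whose area equals the quoted die size. The function name `square_side_mm` is just something I made up for illustration, and the only inputs are the two G92b estimates quoted above.

```python
import math

def square_side_mm(area_mm2: float) -> float:
    """Side length (mm) of a square die with the given area (mm^2)."""
    return math.sqrt(area_mm2)

# The two G92b area estimates quoted in the post (mm^2).
g92b_estimates = {"PC Perspective": 231.0, "Beyond3D users": 270.0}

for source, area in g92b_estimates.items():
    side = square_side_mm(area)
    print(f"{source}: {area:.0f} mm^2 -> {side:.2f} mm per side")

# Area scales with the square of the side, so a die that is 4% larger
# in area is only about 2% longer along each edge.
side_ratio = math.sqrt(1.04)
print(f"4% more area -> {100 * (side_ratio - 1):.1f}% longer side")
```

Since the side only grows with the square root of the area, small drawing errors along an edge turn into roughly twice that error in area, which is probably why the corners drift off the line so easily.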
