I've been wanting to test this out for a while and had some free time this morning. What I'm looking for from this thread is whether we can agree on the testing method, and fix it where needed, so that it gives us consistent, useful results.

What I've done is:

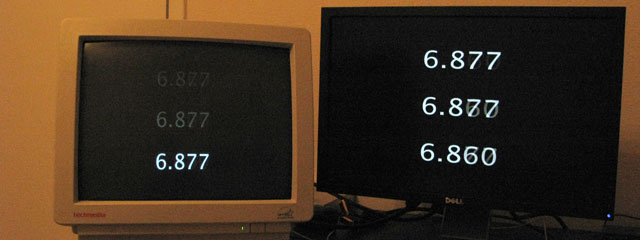

-Use RefreshRateMultiTool

-Windows XP (tried getting my CRT above 85 Hz in Windows 7 for more than 6 hours, then gave up)

-AW2310 @ 1920x1080, 120 Hz

-HP P1130 @ 1024x768, 120 Hz

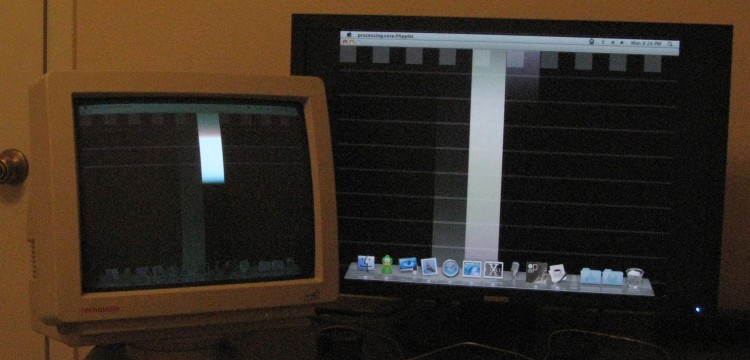

Set up the desktop with one screen on top of the other and stretched the RefreshRateMultiTool window vertically so it spans both screens. Then took some pictures.

From other threads I've read, it seems that doing it this way will introduce some error between the two screens because of how the video card refreshes each output.

From what I can tell from the pictures, the AW2310 is never more than one bar behind. You can see it lighting up the pixels (almost like a grayscale bar going from black to white) not far behind the CRT.
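To put "one bar" into milliseconds, here is a rough sketch. It assumes each bar in the tool corresponds to exactly one refresh interval, which I have not verified, so treat the result as an upper bound under that assumption rather than a measured value. The function names are just made up for illustration.

```python
def frame_period_ms(refresh_hz: float) -> float:
    """Duration of one refresh interval in milliseconds."""
    return 1000.0 / refresh_hz


def lag_upper_bound_ms(bars_behind: int, refresh_hz: float) -> float:
    """Upper bound on lag, ASSUMING one bar equals one refresh interval."""
    return bars_behind * frame_period_ms(refresh_hz)


if __name__ == "__main__":
    # Both screens in this test run at 120 Hz.
    print(round(lag_upper_bound_ms(1, 120.0), 2))  # 8.33
```

So if that assumption holds, "never more than one bar behind" would mean the LCD trails the CRT by at most roughly 8.33 ms at 120 Hz, before accounting for any offset between the two outputs.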

The one thing I'm not sure about is why some of the pictures show some yellow on the LCD. The ambient lighting never changed while I was taking the pictures.

Click HERE for all 286 pictures if you want to go through them and post the analyzed data. (I did not feel like it lol...)
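If anyone does go through the pictures, something like this could tally the results. It is only a sketch: it assumes you note by hand, for each photo, how many bars the LCD is behind the CRT, and the `summarize` name and the sample numbers in the usage line are placeholders, not real data from my pictures.

```python
from collections import Counter


def summarize(bars_behind_per_photo):
    """Map each observed lag (in bars) to (photo count, share of all photos)."""
    counts = Counter(bars_behind_per_photo)
    total = len(bars_behind_per_photo)
    return {bars: (n, n / total) for bars, n in sorted(counts.items())}


if __name__ == "__main__":
    # Placeholder readings for illustration only, NOT taken from the 286 photos.
    example = [0, 1, 1, 0]
    for bars, (n, share) in summarize(example).items():
        print(f"{bars} bar(s) behind: {n} photos ({share:.0%})")
```

A breakdown like this would make it easier to agree on whether the method gives consistent results across photos.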
