erek


Impressive

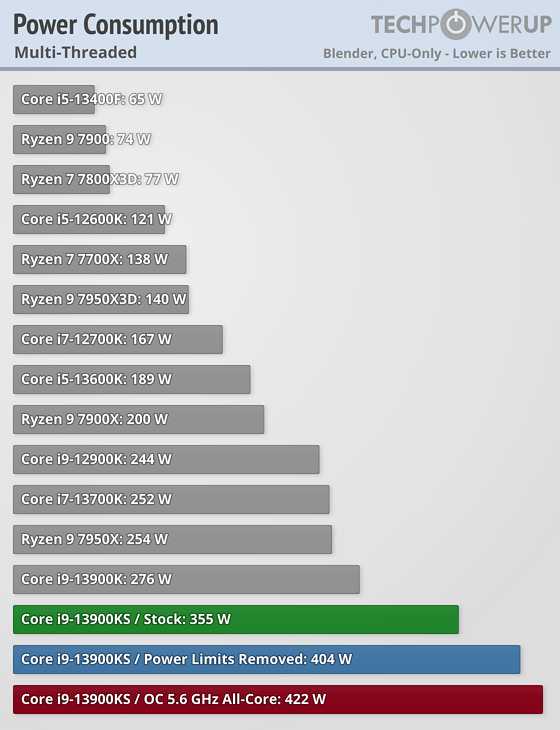

"Cooling such high heat output isn't easy on the CPU cooler either. Moving 350 W from a tiny silicon die through a thick heatspreader is no easy task. Even with a large AIO sitting on top of the IHS, you'll be close to 100°C when heavily loaded. With an air cooler, you'll regularly thermal throttle. This is not a huge deal, as modern processors are very good at holding a target temperature by slightly reducing clocks, without performance falling off a cliff, but it's still not what you've spent all that money for. No doubt, you can undervolt the 13900KS and dial the power limits back, but then why buy a KS in the first place? Things aren't much better on the Zen 4 side: because AMD wanted to keep cooler compatibility with Socket AM4, they had to install an extra-thick heatspreader on the AM5 CPUs, which makes them difficult to cool, too, although it's easier thanks to their lower overall heat output.

Although the unlocked multiplier makes overclocking technically easy, it is limited by the cooling system. Even when the thermal limit is raised from 100°C to the maximum of 115°C, it is difficult to push voltage much further, even with an AIO. At least Intel gives us the option to adjust the temperature limit; AMD has no such feature. Manual overclocking is also complicated by the fact that the low-thread-count clocks on two cores are so high (6.0 GHz), while the other cores run at lower speeds. My highest all-core OC was 5.6 GHz, which results in minimal performance gains, because the CPU runs at 5.6 GHz almost all the time at stock anyway. I think it's about time that Intel provided us with better overclocking controls for precise adjustments. Intel XTU also needs an overhaul, as AMD's Ryzen Master provides a superior user experience. It's high time for Intel to update their boosting technologies, as they still rely on almost decade-old algorithms. In contrast, AMD has been consistently refining their methods every few years and is achieving significant improvements by incorporating more fine-grained mechanisms that consider many more variables on the running system.

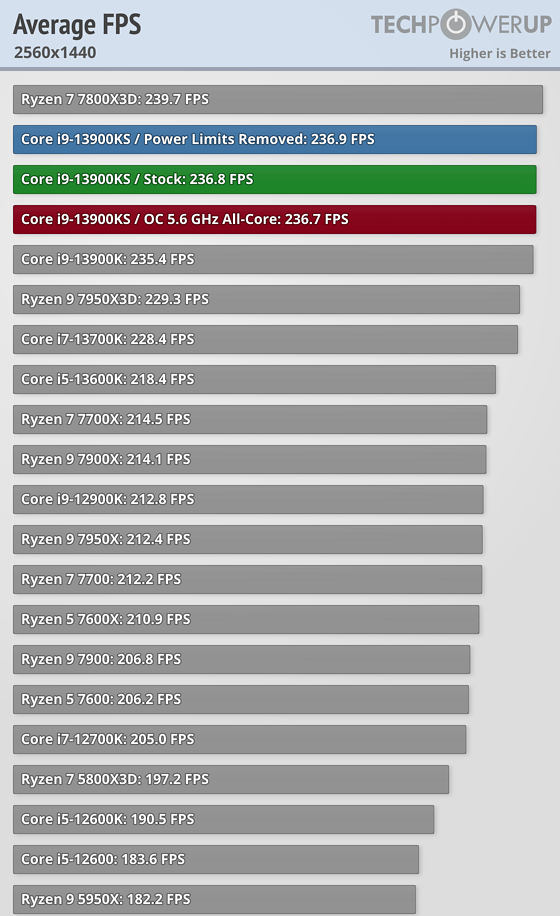

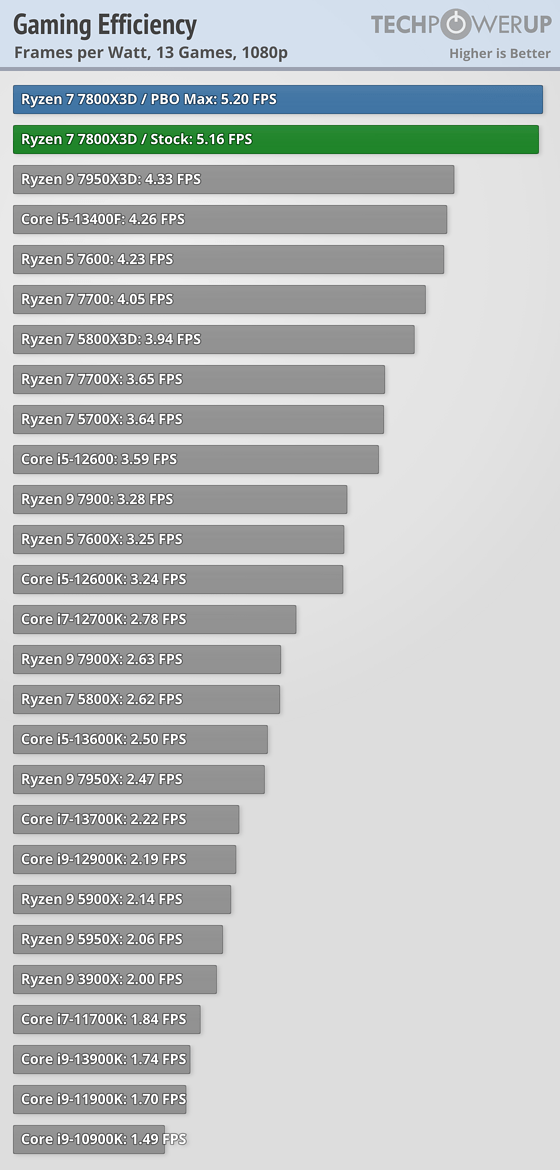

Intel has announced an MSRP of $700 for the 13900KS, which matches the AMD Ryzen 9 7950X3D. Compared to the 13900K, the price increase is $130, which is very hard to justify for a few percent of performance and some extra power consumption. On the other hand, you're getting a processor that impresses with fantastic performance in all areas, both applications and gaming. With the Ryzen offerings you'll have to make more compromises: the 7800X3D is the best processor for gaming, and only $450, but it's a bit slower in applications. The 7950X3D is among the fastest in gaming and applications, but requires custom AMD software for game detection and processor thread management that doesn't always do the right thing. For a more application-focused experience you could opt for the 7950X, but you'll lose out on a bit of gaming performance due to the lack of 3D V-Cache and the dual-CCD design. At the end of the day, all these processors are really good at everything, and you'll have a hard time noticing much of a subjective difference. On the other hand, I can imagine a lot of people out there with deep pockets who want "the fastest," especially for content creation, who don't care much about power and also like the bragging rights of owning the Limited Edition KS. For the vast majority of gamers and users out there, a 13700K or 7700X would be the much better choice: not much slower, and the price difference compared with the 13900KS pays for the motherboard and memory. Last but not least, the 5800X3D is a great gaming option, too. Let's hope that Intel's Meteor Lake can make progress with power efficiency, and then everything else will come together, too; they have the IPC and performance."

Source: https://www.techpowerup.com/forums/threads/intel-core-i9-13900ks.307608/
