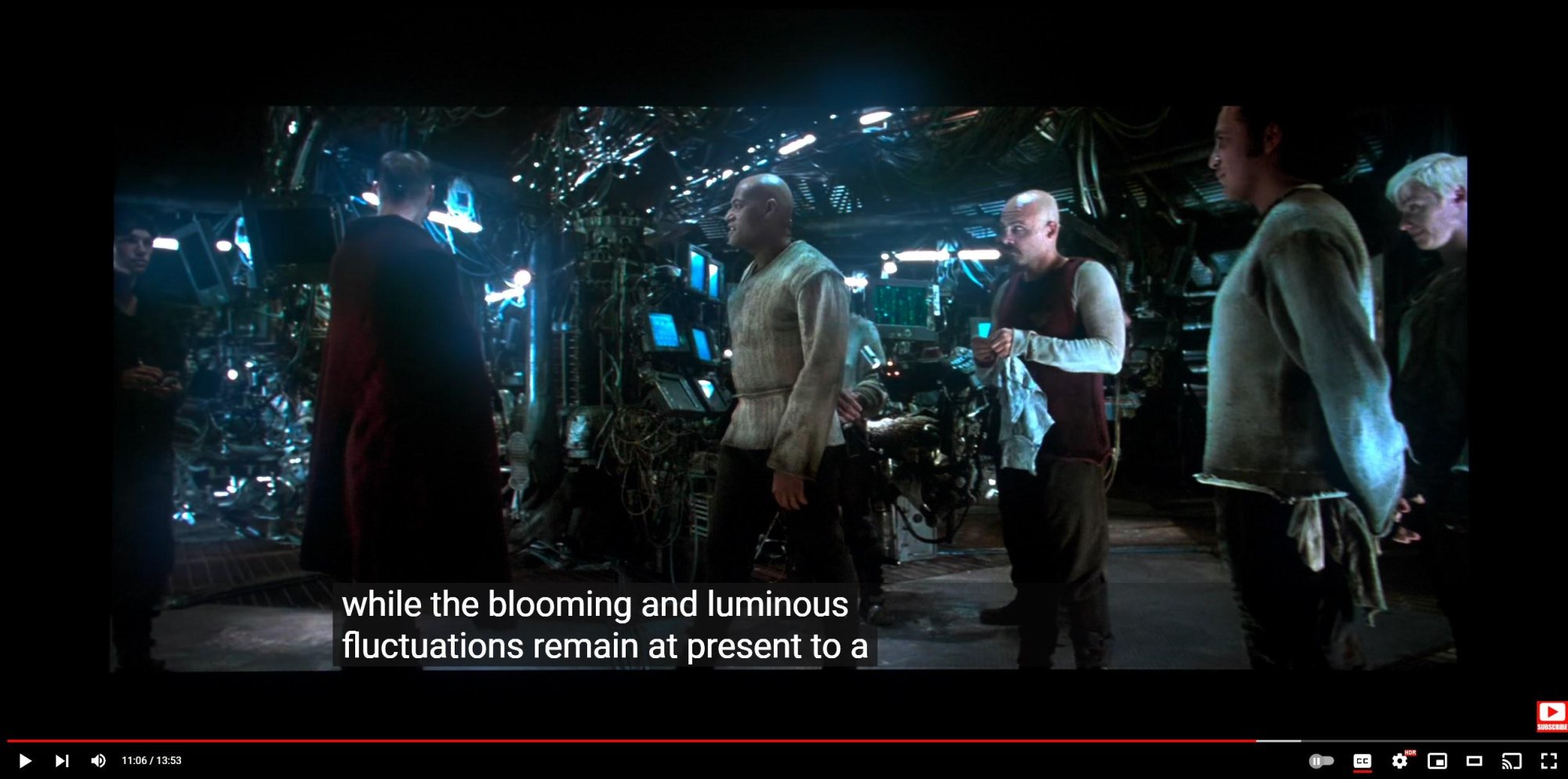

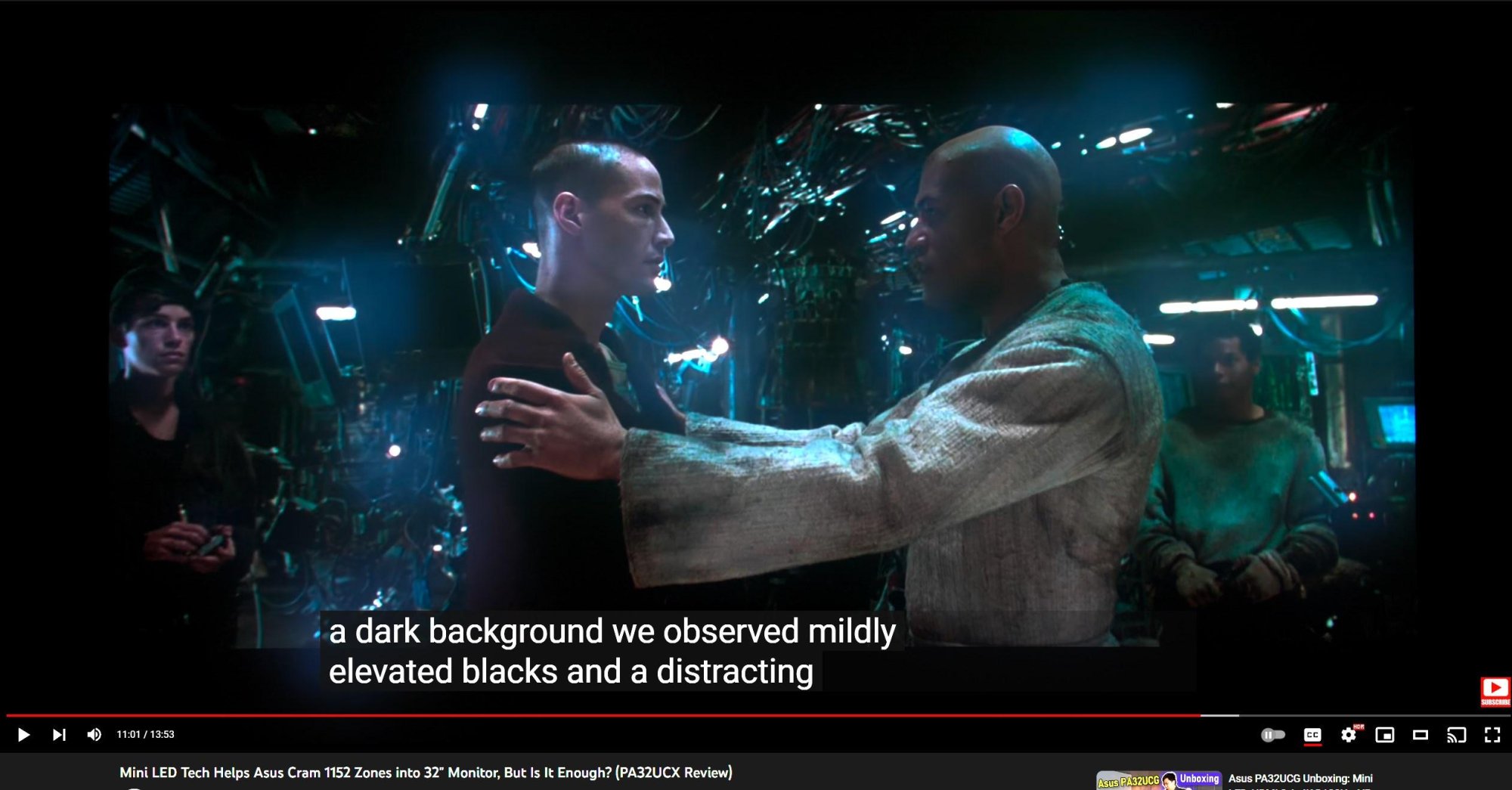

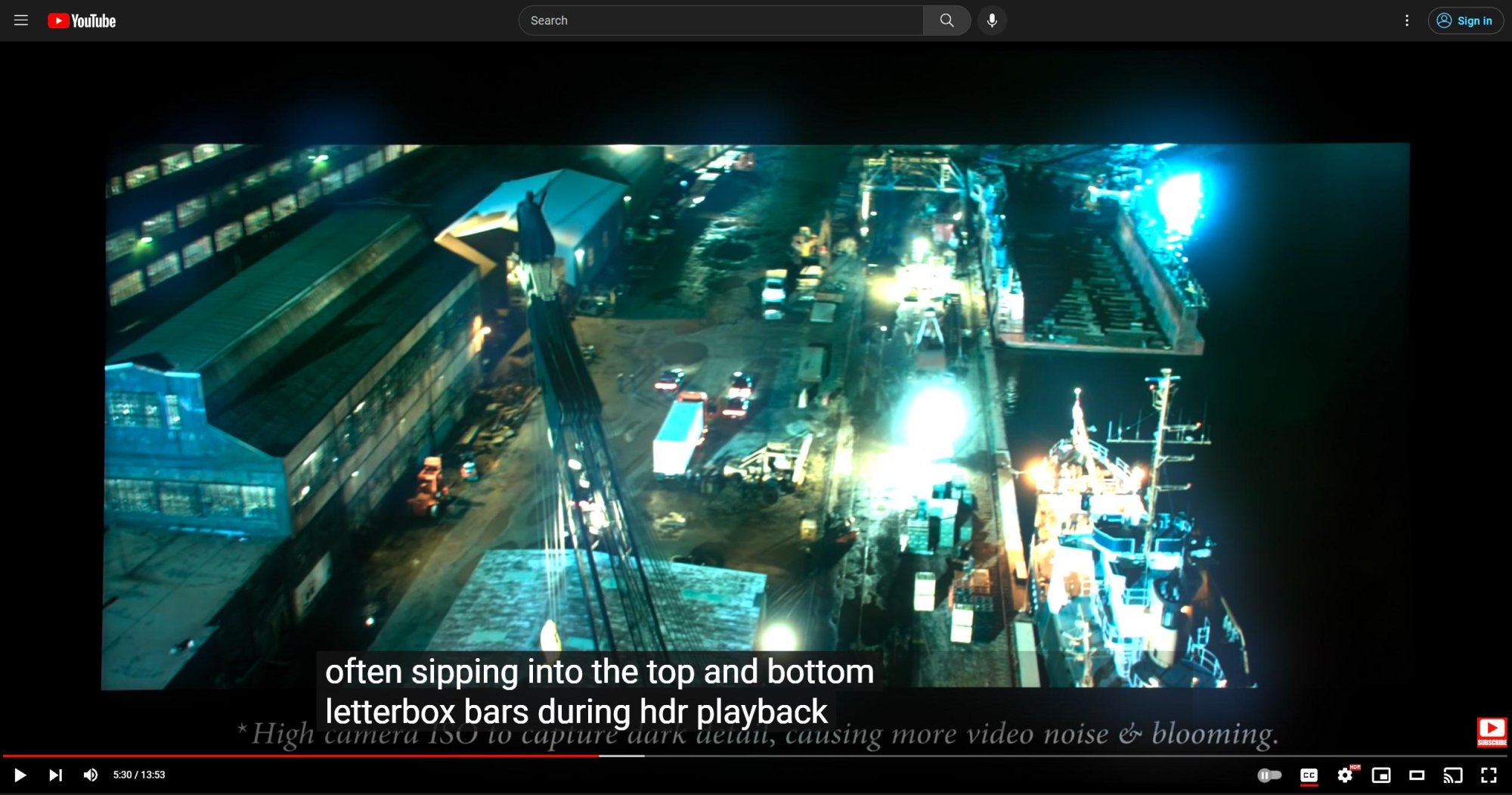

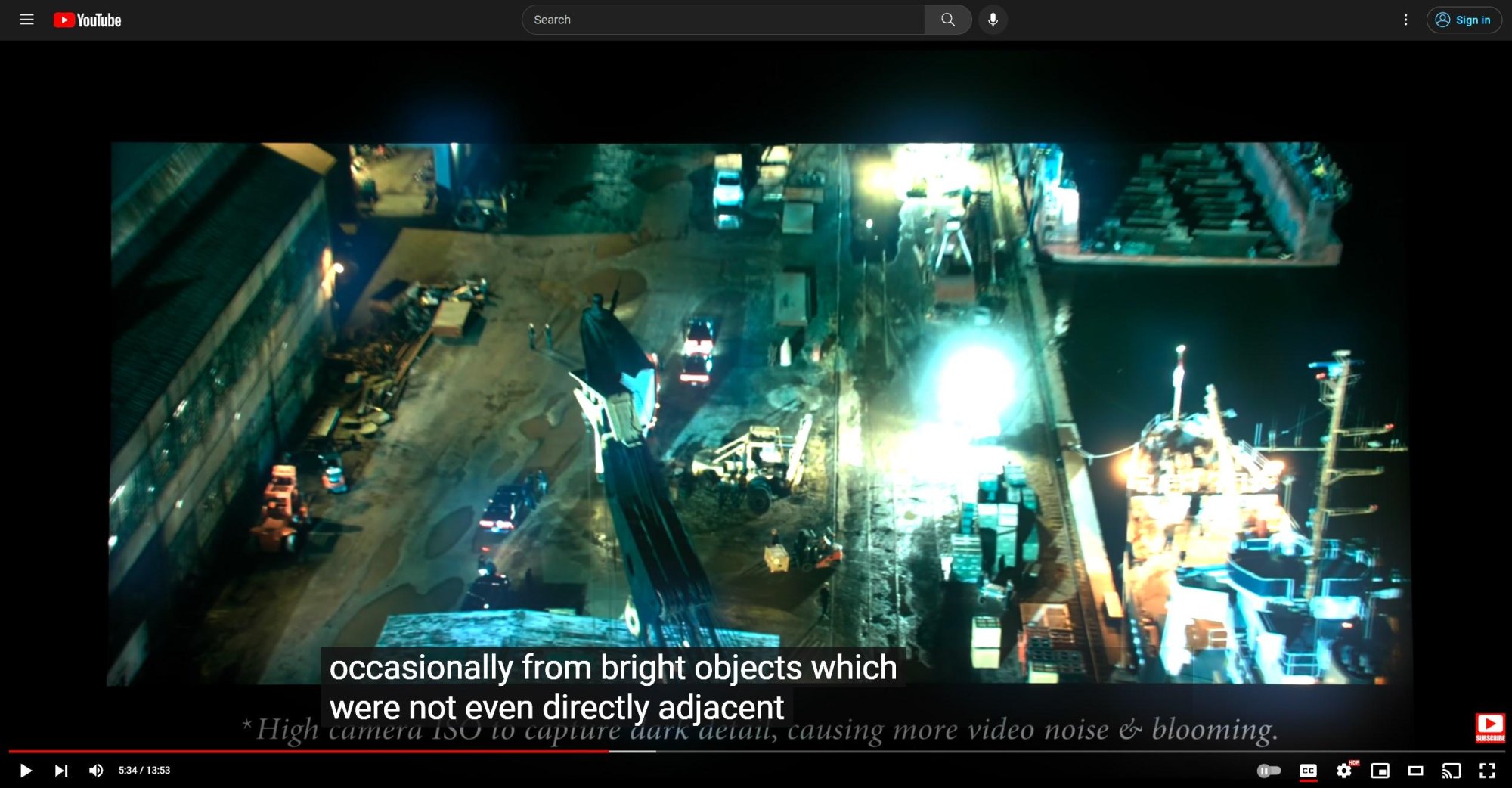

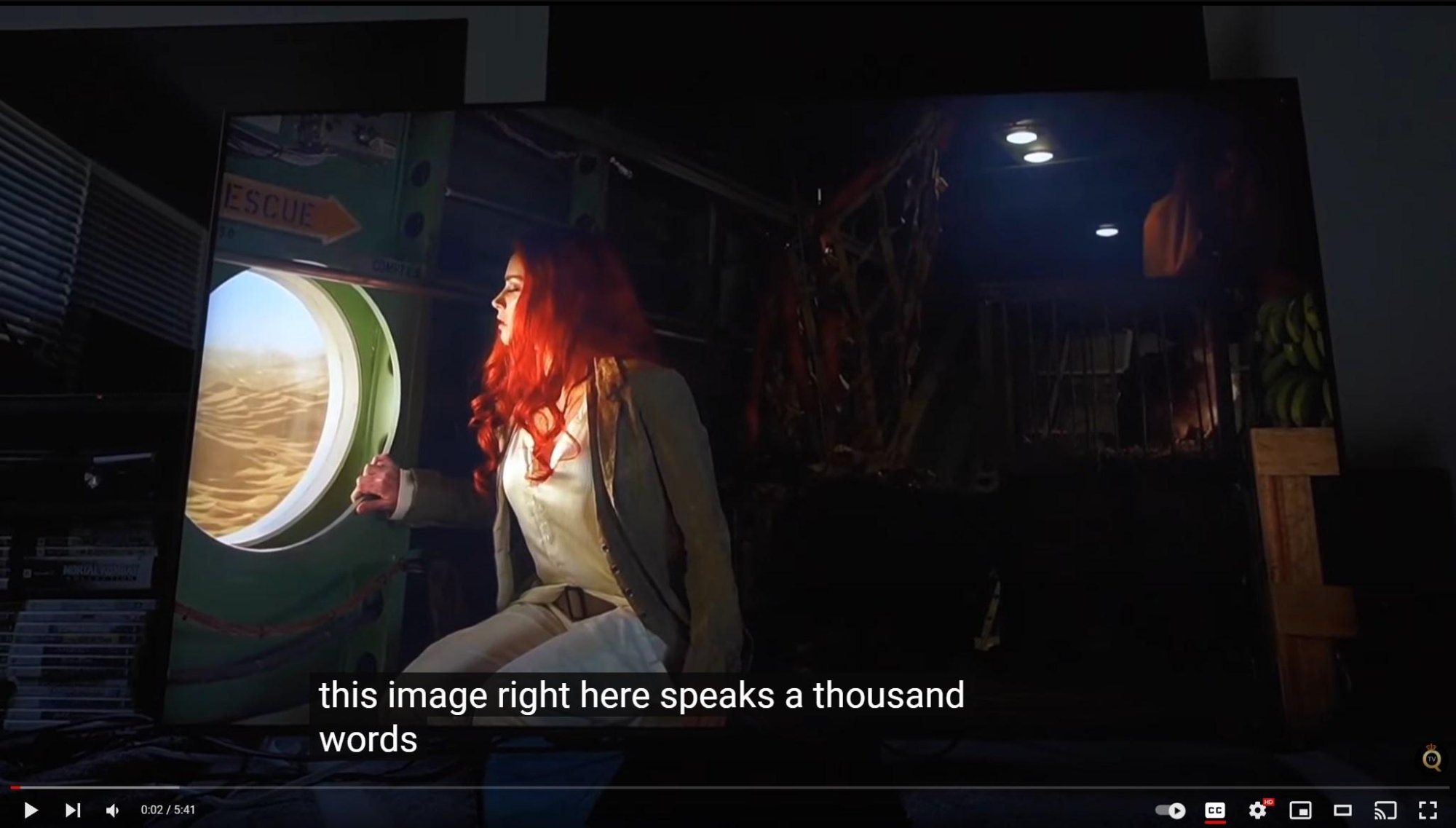

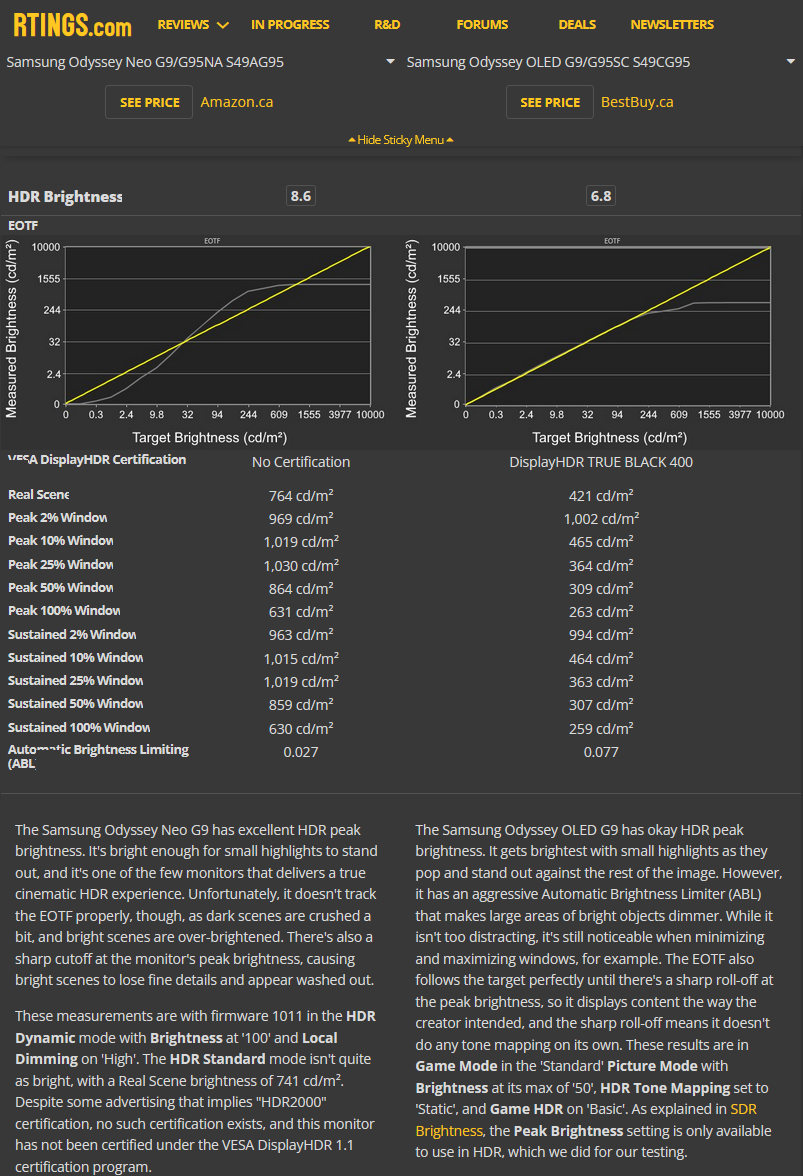

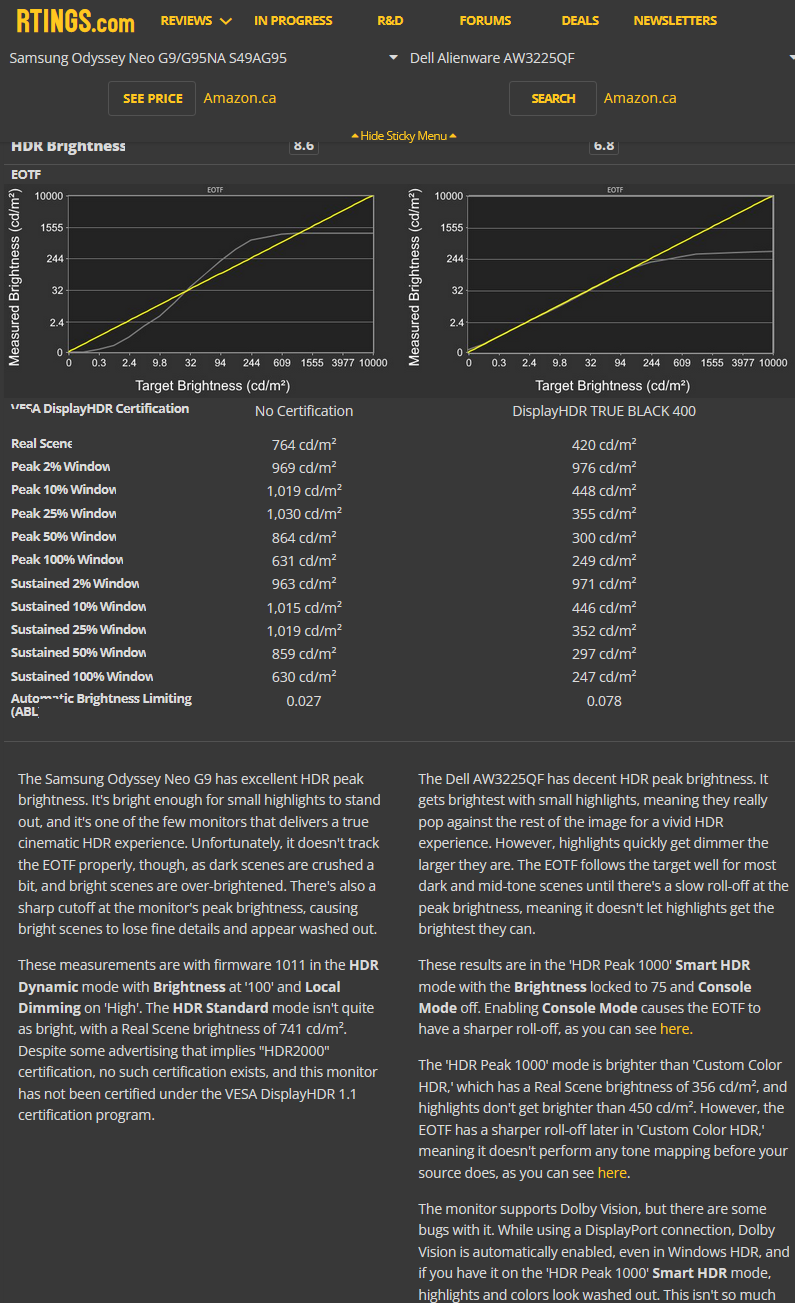

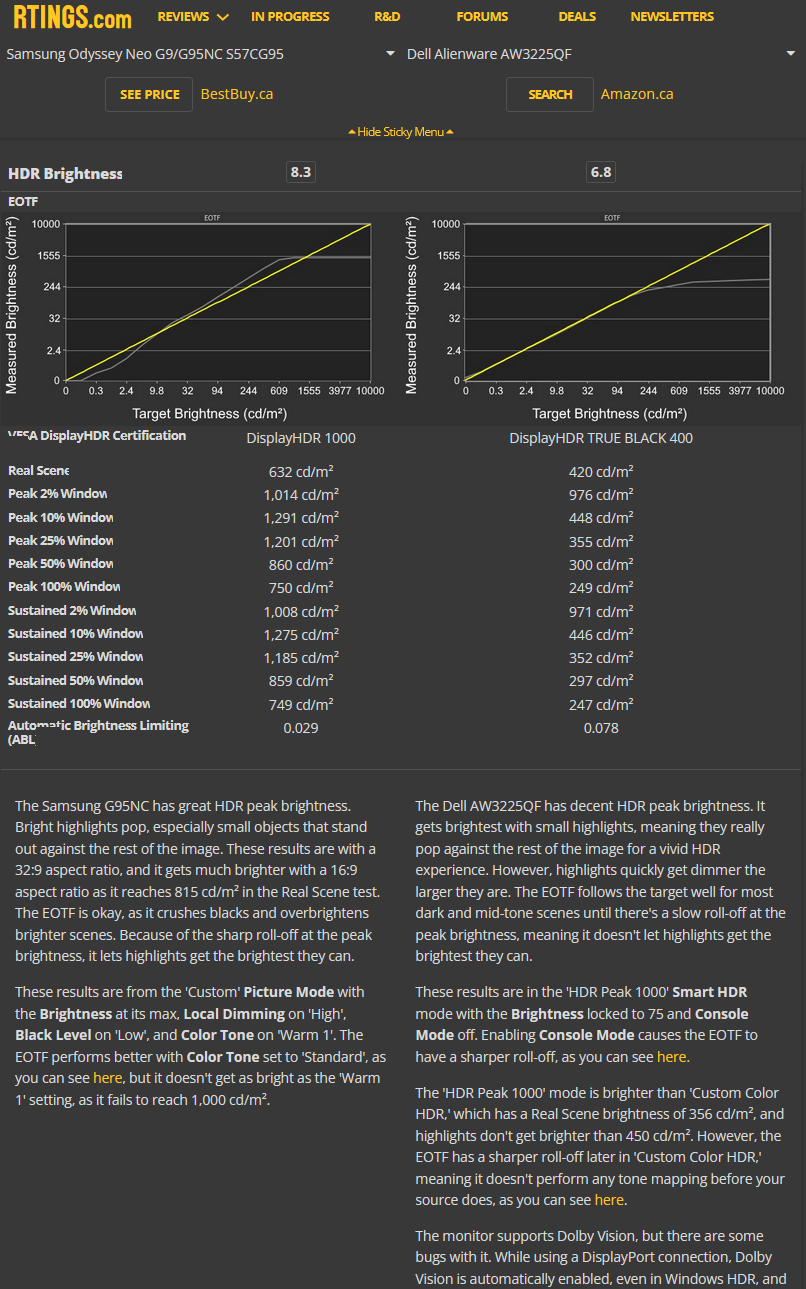

It's not the be-all, end-all... but it does matter to how good HDR looks more than you might think. I was pretty surprised myself. I have an S95B TV and have had it since not long after it came out. That's where I did my HDR gaming. It does about 700-800ish nits real scene brightness. It is really good and I like it... but then I got a PG32UQX monitor. That does 1600-1700 nits real scene, and actually in pretty much ANY scene, as it can pull that even at 50% of the screen. Man, it looks SO MUCH nicer. Despite not having pixel-perfect dimming and my being able to notice the FALD zones sometimes, and despite the much lower motion clarity, the impact of HDR games is just way greater with that higher brightness.

Ah yes. Forgot about brightness. I'm still in SDR land so I don't really care too much about that. Most of my uses don't need HDR, but good point.

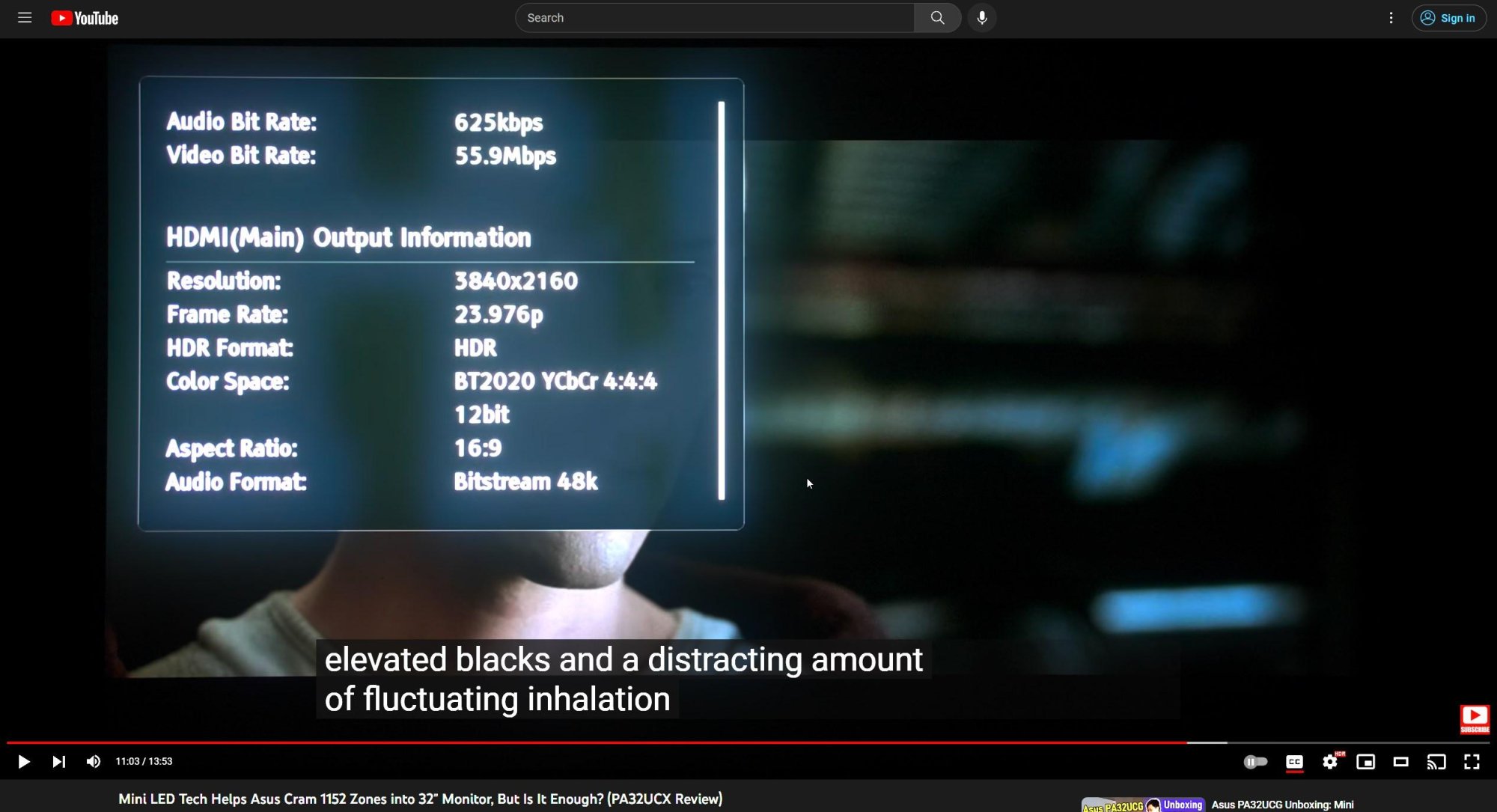

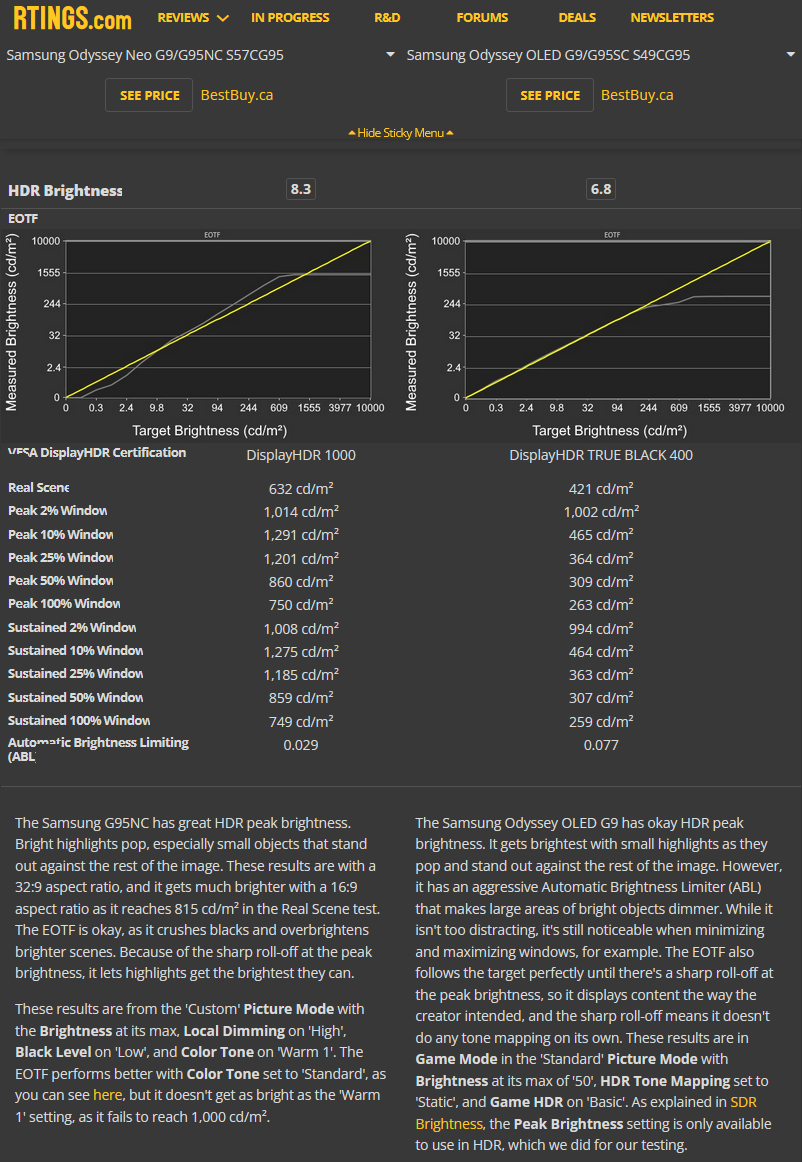

It's still an area that OLED monitors have real trouble in. TVs have always been better and have made more gains: the S95C, which is the successor to my TV, can push as high as 1000-1100 nits real scene, and the LG G3 can do that, maybe even a bit more. But the monitors just can't. They can hit peaks nearly as high as the TVs, but only at something like a 1-2% window. By a 10% window they drop hard (particularly the QD-OLEDs), whereas TVs can maintain their brightness at 10%.

That and burn-in are the two areas where I'm most hoping to see improvements in OLED monitors. Better text clarity would be nice too, but really that's on MS to quit being sloths about it and just get some new anti-aliasing patterns into Windows.
