Zarathustra[H]

Extremely [H]

- Joined

- Oct 29, 2000

- Messages

- 38,955

Not according to ASRock: https://www.asrock.com/mb/AMD/WRX90 WS EVO/index.asp#CPU

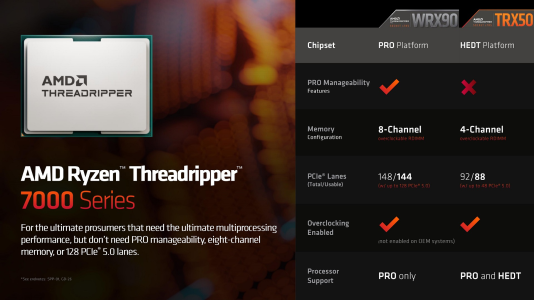

My recollection from the Threadripper 7000/TRX50 launch is that non-PRO CPUs can go into PRO motherboards (but get fewer PCIe lanes than the PRO CPUs in the same motherboards), but PRO CPUs cannot go into non-PRO motherboards.

I'm not 100% sure on this, as it has left my active brain L3 cache at this point, but I vaguely remember that being a thing.
