erek

[H]F Junkie

- Joined: Dec 19, 2005

- Messages: 11,049

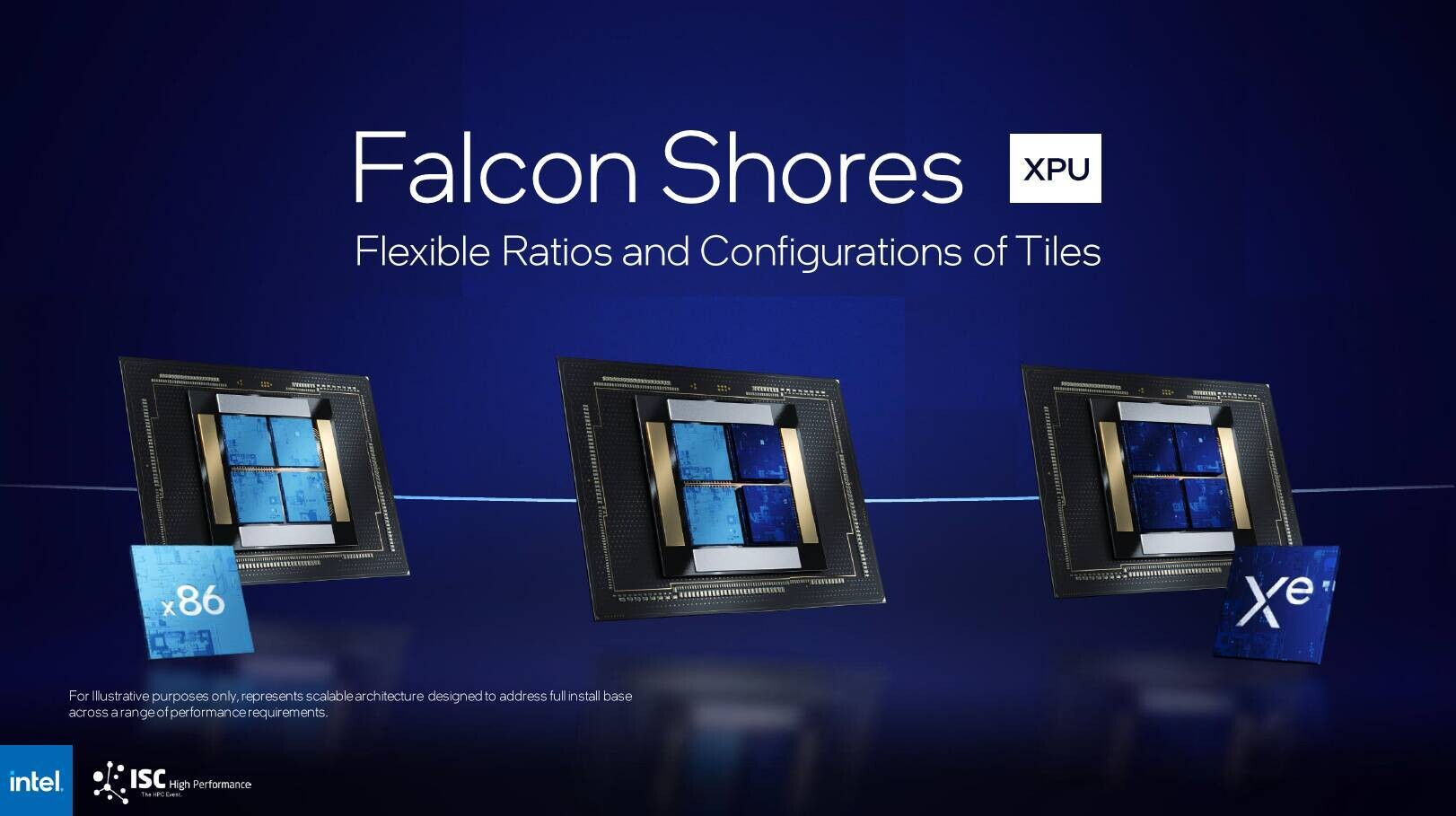

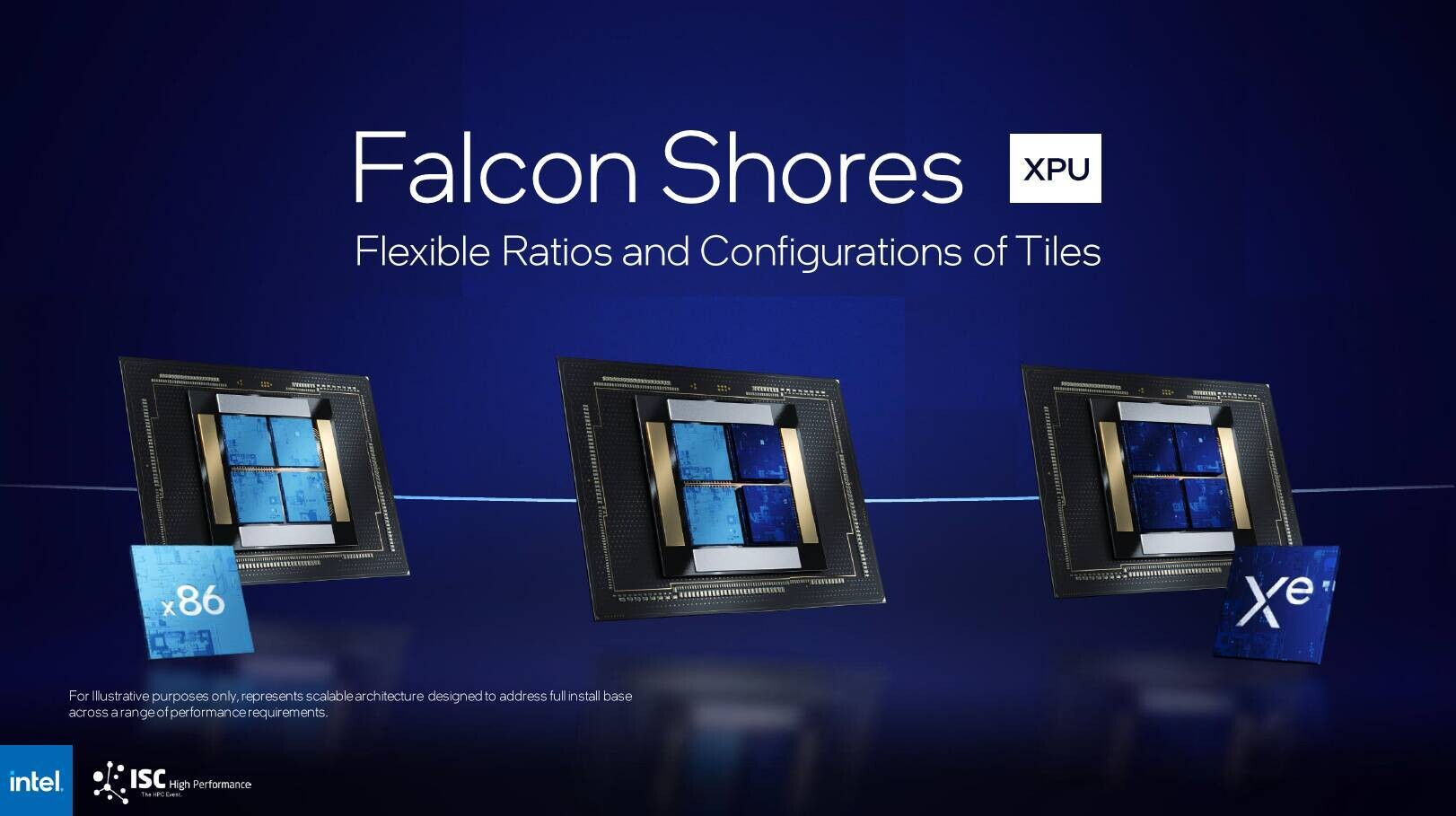

"Intel may need to develop proprietary hardware modules or a new Open Accelerator Module (OAM) spec to support such extreme power levels, as the current OAM 2.0 tops out around 1000 W. Slated for release in 2025, the Falcon Shores GPU will be Intel's GPU IP based on its next-gen Xe graphics architecture. It aims to be a major player in the AI accelerator market, backed by Intel's robust oneAPI software development ecosystem. While the 1500 W power consumption is sure to raise eyebrows, Intel is betting that the Falcon Shores GPU's supposedly impressive performance will make it an enticing option for AI and HPC customers willing to invest in robust cooling infrastructure. The ultra-high-end accelerator market is heating up, and the HPC accelerator market needs a Ponte Vecchio successor."

Source: https://www.techpowerup.com/322592/...-consume-1500-w-no-air-cooled-variant-planned

