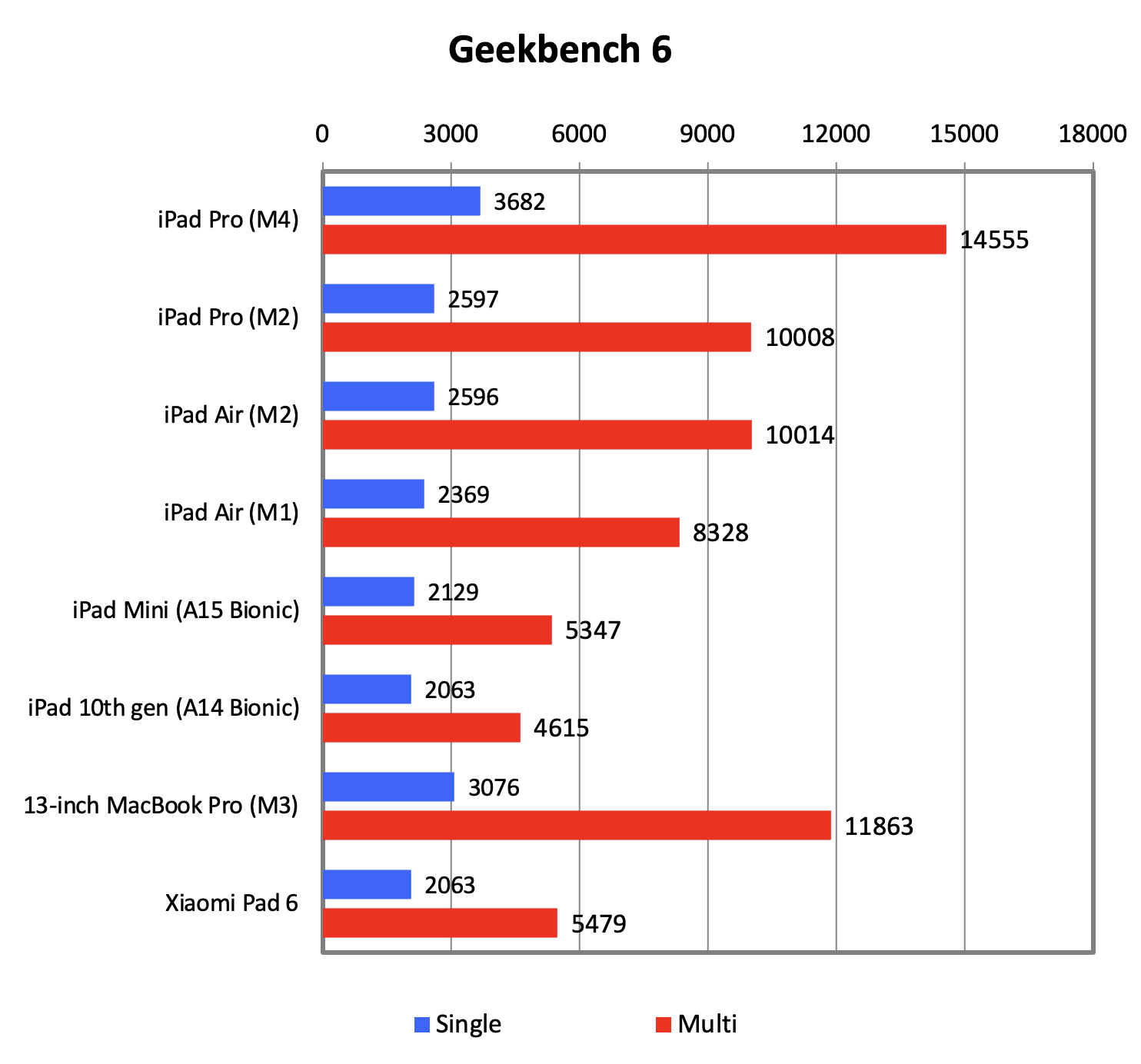

At first glance this looks quite impressive?

+22% over the 13-inch laptop form factor in multi-thread?

As mentioned, I imagine that for most people this only translates into much more battery life (as the iPad will always use just a small part of the chip), which is not useless for an iPad...

That said, it is probably telling that after quickly scrolling through 4 reviews, I have not seen a single actual application benchmark (a bit like what we often see with new NVMe SSDs). Outside of battery life, maybe all that extra power feels different when you use it, but it is not something easy to put a number on for what people do with them...

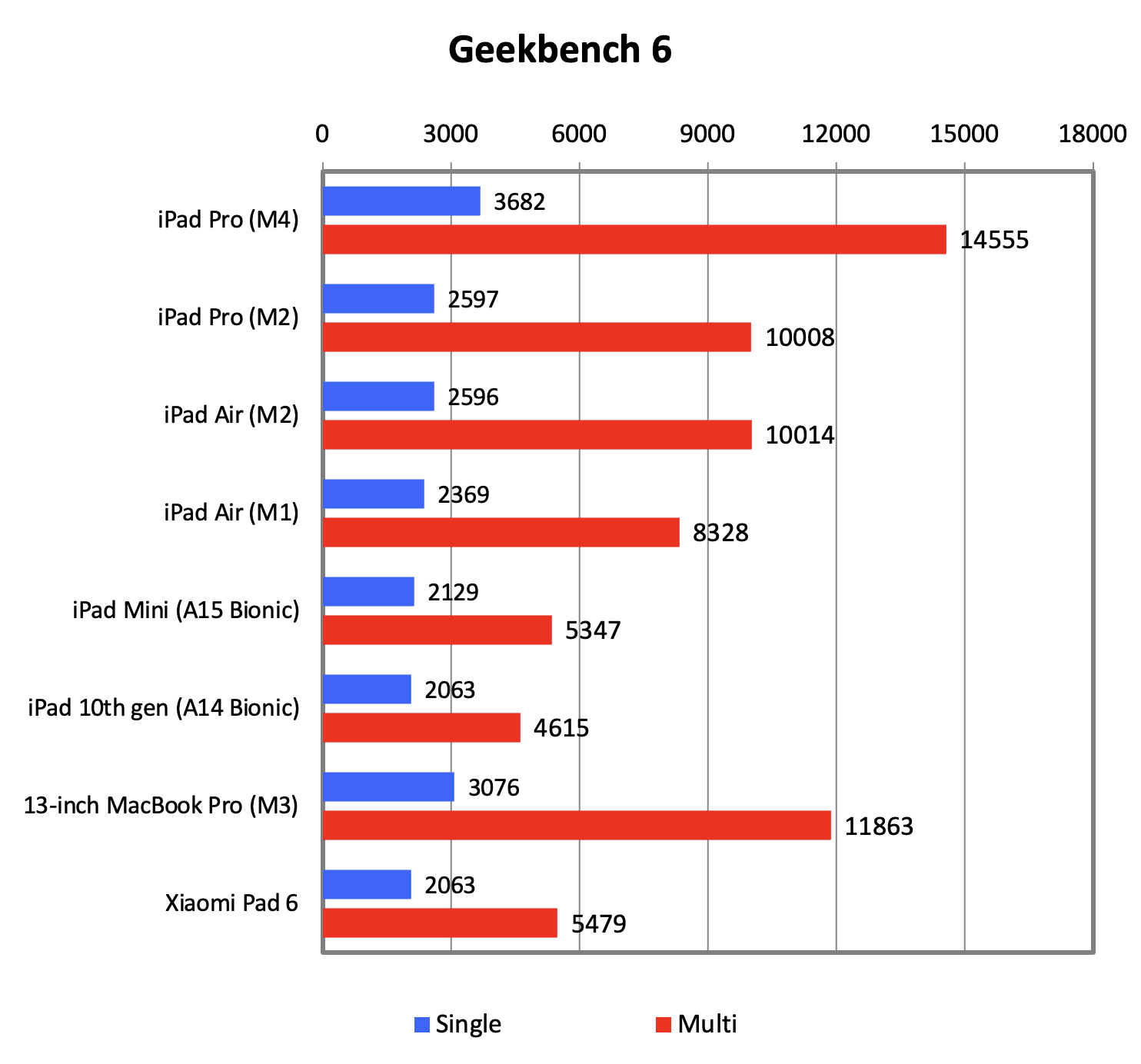

