I'm not sure this one is really predicting the death of x86 so much as x86 likely being pushed out of specific markets. The difference here is that x86's death has been predicted many times before. Even Intel tried to replace x86 with Itanium, only to see AMD add 64-bit extensions to x86 (x86-64), and the performance difference was huge.

“When we look at the future roadmaps projected out to mid-2006 and beyond,” Jobs said, “what we see is the PowerPC gives us sort of 15 units of performance per Watt, but the Intel roadmap in the future gives us 70. So this tells us what we have to do.” -- Steve Jobs

"I'm going to destroy Android, because it's a stolen product. I'm willing to go thermonuclear war on this," --Steve Jobs

I think Pat still believes that if x86 remains the better option for desktops and high-performance workstations, then Windows stays x86 and holding onto a market like laptops is just a given.

It's not a given in any way. M1 should have scared the shit out of Pat, but it didn't, because frankly he has his head up Intel's (his own) ass. What should have scared him about M1 is that Apple was selling x86 for desktops and ARM for laptops... and it was no big thing. Users don't care. Who cares if Safari on one machine is compiled for x86 and on the other for ARM? It makes no difference to an end user. The thinking 10 years ago was that ARM could never be a thing because companies weren't going to rewrite all their software for ARM... and if they did, were they then going to abandon x86? It's no longer an either/or situation. The market can support both side by side, no issue.

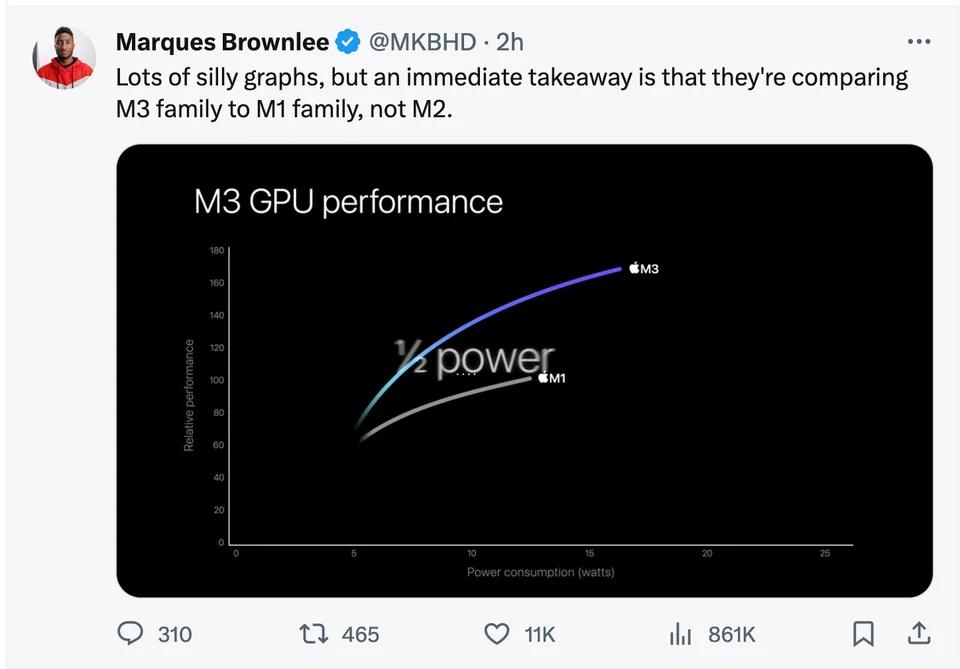

MS is sort of slowly getting it right. We are basically at a point now where, if you use the MS toolkits as a developer, the vast majority of devs don't have to do anything special to support ARM. So with most modern developers building software that can target either architecture, and more and more users not caring at all, Intel should be concerned about losing the mobile market completely. Pat is very laissez-faire about the entire thing, thinking Windows users still care that they have Intel Inside. I suspect that in about 5 years Intel's share of the laptop market will drop under 50% (they are currently at something like 77%). Within a decade they will either have to build their own ARM product or live with single-digit market share in that market. No matter how much they customize, trim and strip x86 down, it will never match ARM on the same process in terms of efficiency and modularity. ARM as it's currently built is just much easier, hardware-wise, to mesh with coprocessing units like AI accelerators, camera chips, and basically anything else. Yes, you can bolt that stuff onto x86, but you can't build it to share cache space and resources the way you can with ARM.

https://learn.microsoft.com/en-us/windows/arm/overview


![[H]ard|Forum](/styles/hardforum/xenforo/logo_dark.png)