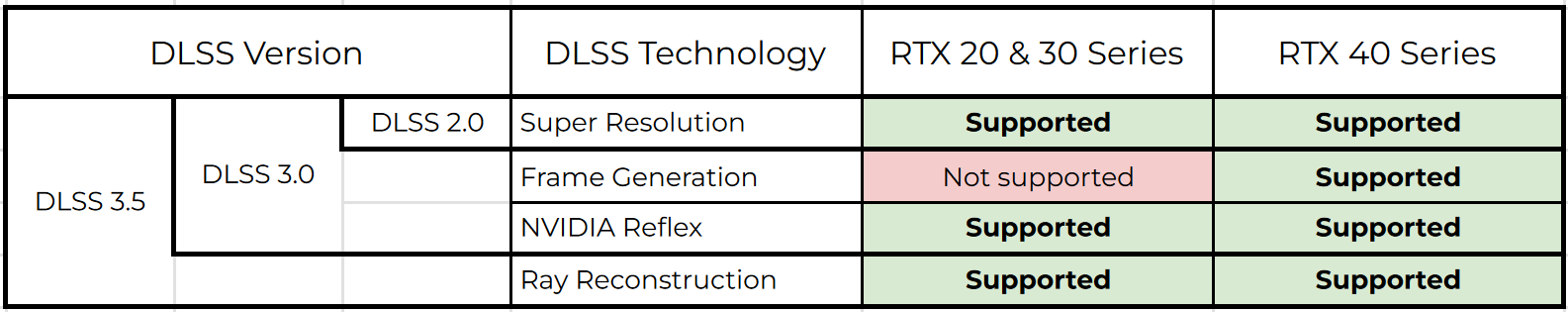

Why do you guys keep bringing up AMD (jesus, red vs green on the brain)? We are talking about path tracing and the improvements in DLSS to deal with path tracing. I'm mentioning that path tracing has downsides. How does this address that?

I never claimed they invented the concept. I said they developed new algorithms and processes that were orders of magnitude faster and more efficient than anything anybody else was doing. They hold the patents on those algorithms and processes, and those patents are what primarily give them the edge they have over AMD or Intel when it comes to ray tracing performance. At this time, Nvidia's algorithms allow them to be something like 30% faster than AMD, transistor for transistor, when it comes to accelerating ray tracing effects. That is a huge leap, and it is why Nvidia manages to stay consistently ahead of AMD, which has to try to replicate the functionality while dancing around patents.

Reyes rendering was created in the 1980s and is NOT unique to Nvidia. RenderMan was created in 1993 and used Reyes rendering THEN, and it predates ANY work that Nvidia has done on the topic. Reyes rendering was completely dropped from RenderMan in 2016. Most likely RIS, which doesn't include Reyes rendering at all, was used for Finding Dory. Bolting Nvidia patents on speeding up Reyes onto RenderMan is crazy.

Finding Dory, Cars 3, and Coco were all rendered using Pixar's RenderMan and their supercomputer (currently ranked 25th fastest). They are entirely path traced, and RenderMan uses brute force to implement the entirety of the Reyes algorithms, a topic Nvidia has been consistently researching, publishing, and patenting, going back to at least 2005.

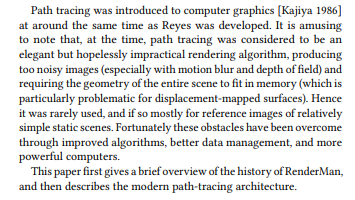

Here is a link to one of their many such early works on the topic.
