mzs_biteme

[H]ard|Gawd

- Joined: Nov 7, 2001
- Messages: 1,595

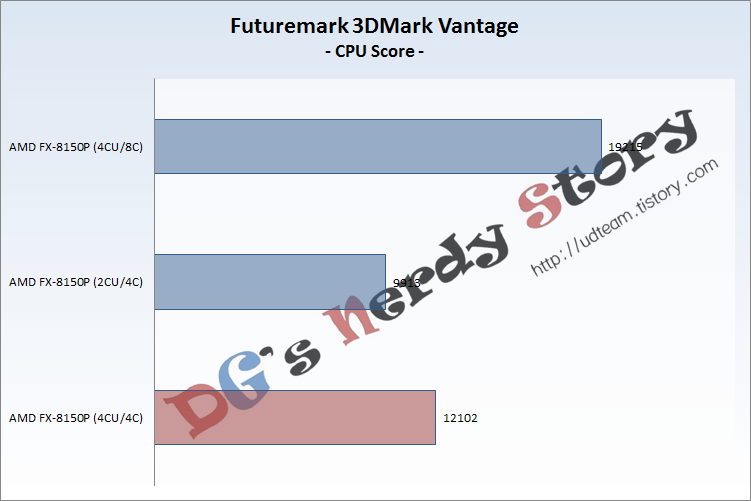

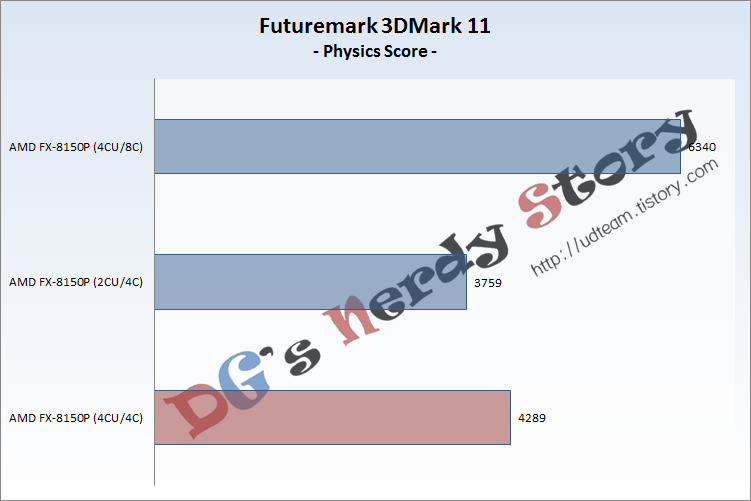

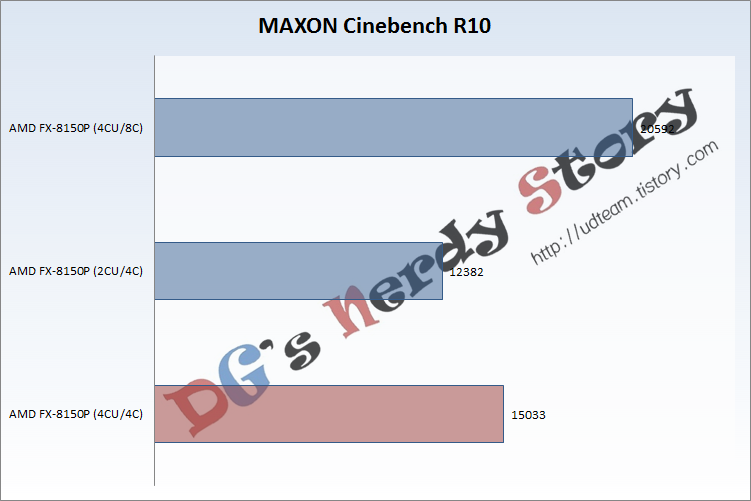

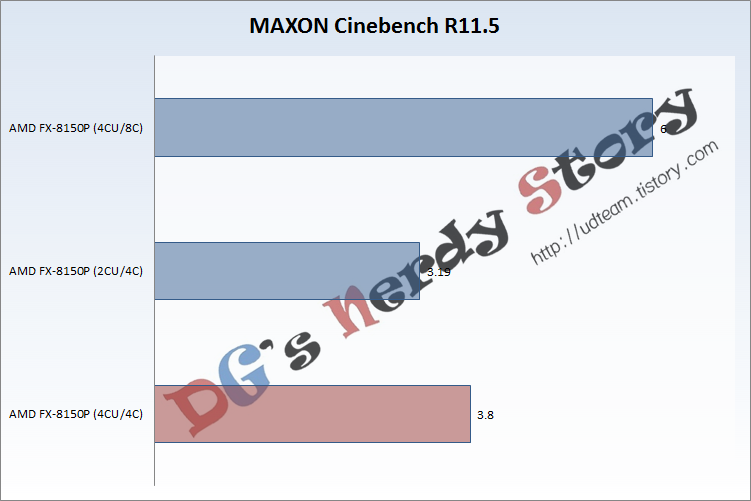

Hmm, something about cache thrashing...

As bad as Bulldozer (BD) looks right now, I still think there is a LOT to discover and improve on with this new arch... Nothing is over 'til it's over...
