More rumors:

http://forum.donanimhaber.com/m_31456023/mpage_1/key_//tm.htm#31456023

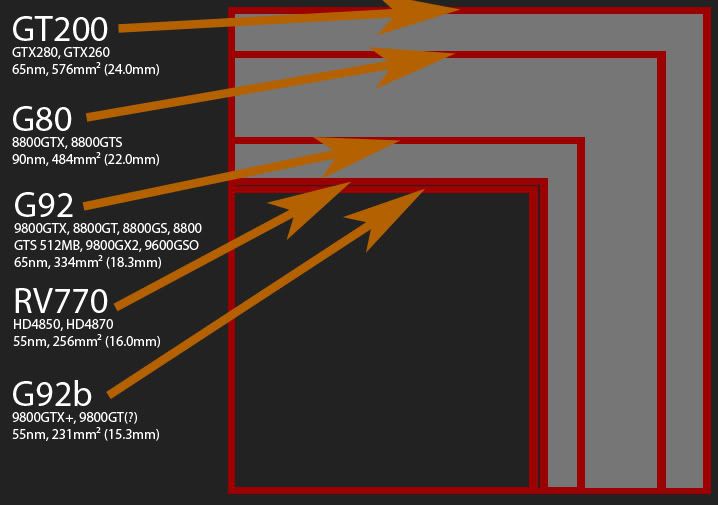

This, coupled with the roughly 300 mm² die size, suggests that an awful lot of work had to be done on the RV770 architecture in order to include DX11 features.

No word on transistor count yet.
