erek

[H]F Junkie

- Joined: Dec 19, 2005
- Messages: 10,929

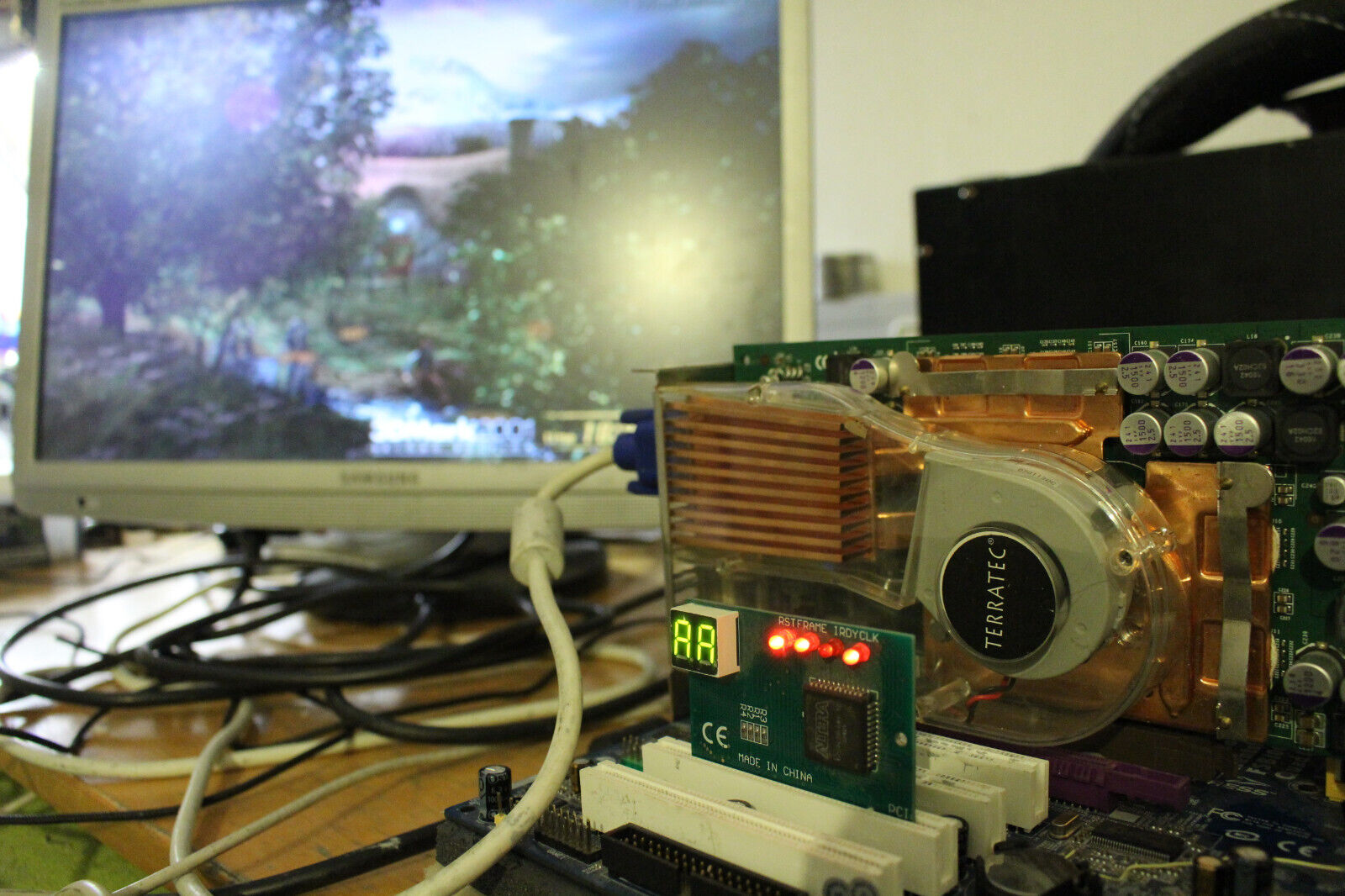

nVidia GeForce FX 5800 Ultra

“Bizarrely, GeForce FX 5800 Ultra cards now fetch decent prices among collectors, thanks to their rarity and the story that surrounded them at the time. If you were unlucky enough to buy one and still have it lurking in a drawer, it might be worth sticking it on eBay.

We hope you’ve enjoyed this personal retrospective about GeForce FX. For more articles about the PC’s vintage history, check out our retro tech page, as well as our guide on how to build a retro gaming PC, where we take you through the trials and tribulations of working with archaic PC gear.

We would like to say a big thank you to Dmitriy ‘H_Rush’ who very kindly shared these fantastic photos of a Gainward GeForce FX 5800 Ultra with us for this feature. You can visit vccollect to see more of his extensive graphics card collection.”

View: https://youtu.be/LVEOL4BYqcQ?si=uuJJWpS8nn55jkra

Source: https://www.pcgamesn.com/nvidia/geforce-fx
