elvn

Supreme [H]ardness

- Joined: May 5, 2006
- Messages: 5,322

Or, on the flip side, it ends up being something almost no company implements, just like a lot of features. We still barely have any physics-based simulation in most games; it's messed up that the most advanced physics-based gameplay is in Tears of the Kingdom on the Nintendo Switch. AI has huge potential, but whether companies start utilizing it after the hype dies down in a few years is another question. I expect it will be more of a thing in content creation than in gameplay.

Knowing TV companies, it's either disabled in game mode, or it's on for everything and adds input lag, because lag is not really a concern for movies and TV.

This sort of tech might also work best when paired with streaming apps (as an API or middleware layer) or with Blu-ray players, where you could analyze both past and following frames to figure out what to do with the current frame, and also do the processing ahead of time, before the viewer sees it. E.g., if a streaming service has already streamed 25% of a film when it starts playing it, there's a ton of footage the processor could handle in the background, and it could then just present the enhanced frames. This would remove any real-time demands.
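Processing ahead of time like this is essentially a look-ahead buffer. Below is a minimal sketch, assuming frames arrive as any Python iterable and `enhance` is a hypothetical placeholder for the real processing (the demo just averages each temporal window). Each output frame is computed from up to `past` earlier and `future` later frames, so output trails input by `future` frames; if a quarter of the film is already buffered, that delay never reaches the viewer.

```python
from collections import deque
from typing import Callable, Deque, Iterable, Iterator, List, TypeVar

F = TypeVar("F")


def lookahead_process(
    frames: Iterable[F],
    enhance: Callable[[List[F], int], F],
    past: int = 2,
    future: int = 2,
) -> Iterator[F]:
    """Yield one processed frame per input frame.

    `enhance(window, i)` is a hypothetical hook: `window` holds up to `past`
    earlier and `future` later frames, and window[i] is the frame being output.
    """
    buf: Deque[F] = deque()
    first = 0  # absolute index of buf[0]
    cur = 0    # absolute index of the next frame to output

    def emit() -> F:
        nonlocal first
        while first < cur - past:          # drop frames no longer needed as "past" context
            buf.popleft()
            first += 1
        window = list(buf)[: cur + future + 1 - first]
        return enhance(window, cur - first)

    for i, frame in enumerate(frames):
        buf.append(frame)
        while cur + future <= i:           # enough look-ahead buffered for frame `cur`
            yield emit()
            cur += 1
    while cur < first + len(buf):          # end of stream: flush with shorter look-ahead
        yield emit()
        cur += 1


if __name__ == "__main__":
    def temporal_mean(window, i):
        return sum(window) / len(window)

    print(list(lookahead_process([1, 2, 3, 4, 5], temporal_mean, past=1, future=1)))
    # [1.5, 2.0, 3.0, 4.0, 4.5]
```

A bigger `future` gives the processor more context per frame at the cost of more start-up delay, which a pre-buffered stream or a disc player can absorb and a live game cannot.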

I don't know if that will be true on this model. I think the TV's default upscaling is being replaced by AI. So if the TV's default upscaling in game mode is better than traditional non-AI upscaling methods, even if it's obviously not as good as the full-featured media processing, then it will be a gain and a win. It's just like regular TVs: you turn a lot of processing off in game mode, but you are still able to upscale (in fact, upscaling of 4K is forced on Samsung 8K TVs; there is no 1:1 pixel letterboxed 4K signal capability).
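For context, "traditional non-AI upscaling" generally means a fixed interpolation filter applied to every frame. Below is a minimal sketch of two such filters for the exact 2x case that 4K to 8K represents (3840x2160 to 7680x4320), assuming a grayscale frame stored as a list of rows. Actual TV scalers use their own, typically sharper multi-tap filters plus extra processing, so treat this only as an illustration of the baseline an AI upscaler has to beat.

```python
import math
from typing import List, Tuple

Image = List[List[float]]  # grayscale frame as rows of pixel values


def upscale_nearest(img: Image, factor: int = 2) -> Image:
    """Integer nearest-neighbour scaling: every source pixel becomes a factor x factor block."""
    out: Image = []
    for row in img:
        wide = [px for px in row for _ in range(factor)]
        out.extend(list(wide) for _ in range(factor))
    return out


def upscale_bilinear_2x(img: Image) -> Image:
    """2x bilinear scaling with pixel-centre alignment and edge clamping."""
    h, w = len(img), len(img[0])

    def taps(dst: int, size: int) -> Tuple[int, int, float]:
        src = (dst + 0.5) / 2 - 0.5        # output pixel centre in source coordinates
        i0 = min(max(math.floor(src), 0), size - 1)
        i1 = min(i0 + 1, size - 1)
        frac = min(max(src - i0, 0.0), 1.0)
        return i0, i1, frac

    out: Image = []
    for y in range(2 * h):
        y0, y1, fy = taps(y, h)
        row = []
        for x in range(2 * w):
            x0, x1, fx = taps(x, w)
            top = img[y0][x0] * (1 - fx) + img[y0][x1] * fx
            bottom = img[y1][x0] * (1 - fx) + img[y1][x1] * fx
            row.append(top * (1 - fy) + bottom * fy)
        out.append(row)
    return out


if __name__ == "__main__":
    frame = [[0, 100]]
    print(upscale_nearest(frame))      # [[0, 0, 100, 100], [0, 0, 100, 100]]
    print(upscale_bilinear_2x(frame))  # [[0.0, 25.0, 75.0, 100.0], [0.0, 25.0, 75.0, 100.0]]
```

Nearest-neighbour keeps edges hard but looks blocky, while bilinear blends neighbours and looks smoother but softer; neither can add detail that isn't in the source, which is the gap AI upscaling tries to fill.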

. . . .

The thing is, there are bandwidth limitations even when using DSC (Display Stream Compression). So getting a high refresh rate signal, for example a 240 Hz 4K 10-bit 4:4:4 (RGB) HDR signal, to the TV first over the existing port and cable limitations using DSC, and THEN using hardware on the TV to upscale it, could be more efficient and give higher-performing results than sending a pre-baked 8K signal (upscaled from 4K) from the Nvidia GPU at a lower refresh rate. Unless they start putting Nvidia upscaling hardware in gaming monitors/TVs someday, perhaps.
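To put rough numbers on that, here is a back-of-the-envelope sketch. The assumptions are mine, not from the thread: only active pixels are counted (blanking is ignored, so real link rates are somewhat higher), 10 bits per channel with three channels for 4:4:4/RGB, and HDMI 2.1's 48 Gbit/s FRL rate reduced by its 16b/18b line coding to roughly 42.7 Gbit/s of usable payload.

```python
# Back-of-the-envelope link budget: active pixels only, blanking ignored.

HDMI21_FRL_GBPS = 48.0                           # 4 FRL lanes x 12 Gbit/s
HDMI21_PAYLOAD_GBPS = HDMI21_FRL_GBPS * 16 / 18  # 16b/18b coding -> ~42.67 Gbit/s


def active_rate_gbps(width: int, height: int, hz: int, bpc: int = 10, channels: int = 3) -> float:
    """Uncompressed data rate of the visible pixels in Gbit/s (4:4:4 / RGB)."""
    return width * height * hz * bpc * channels / 1e9


def dsc_ratio_needed(rate_gbps: float, link_gbps: float = HDMI21_PAYLOAD_GBPS) -> float:
    """Minimum compression ratio to fit the stream into the link (1.0 = fits uncompressed)."""
    return max(1.0, rate_gbps / link_gbps)


MODES = {
    "4K 240 Hz (upscale in the TV)": (3840, 2160, 240),
    "8K 240 Hz (pre-upscaled on the GPU)": (7680, 4320, 240),
    "8K 60 Hz (pre-upscaled, lower refresh)": (7680, 4320, 60),
}

if __name__ == "__main__":
    for name, (w, h, hz) in MODES.items():
        rate = active_rate_gbps(w, h, hz)
        print(f"{name}: {rate:.1f} Gbit/s, needs >= {dsc_ratio_needed(rate):.2f}:1 compression")
    # 4K 240 Hz: 59.7 Gbit/s, ~1.40:1; 8K 240 Hz: 238.9 Gbit/s, ~5.60:1; 8K 60 Hz: 59.7 Gbit/s, ~1.40:1
```

Under these assumptions the 4K 240 Hz stream needs only about 1.4:1 compression, while a pre-upscaled 8K stream at the same refresh rate would need about 5.6:1, well beyond the up-to-3:1 ratio DSC is usually described as visually lossless at. Fitting 8K through the same link means dropping to around 60 Hz, which is the trade-off described above.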

So for PC gaming on a gaming TV, putting AI upscaling hardware in the TV sounds like it could be very useful if done right.

I use a 2019 Shield regularly, which has a 2018 AI upscaling chip in it, but that's not the same as having a more modern AI chip in the display itself, given the port and cable bandwidth limits involved in PC gaming on a gaming TV. More modern AI upscaling may be faster and cleaner, and provide more detail, as the generations progress.

Also, for media, the Shield does an OK job upscaling to 4K, but it is a big leap to do 8K fast enough, cleanly enough, and with enough detail gained.

For PC gaming, the bandwidth savings from upscaling on the display side would be important, especially given the way Nvidia has allotted bandwidth on its ports up until now (8K at 60 Hz max, 7680x2160 at 120 Hz max, limits on multiple 240 Hz 4K screens). That could potentially change with the 5000 series, to where a single port gets full HDMI 2.1 bandwidth; I hope so, at least.
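The port limits listed above line up on a single number. A quick check, ignoring blanking intervals, shows that 8K at 60 Hz, 7680x2160 at 120 Hz and 4K at 240 Hz all carry the same active pixel rate. Reading that as one fixed per-port pixel budget is my inference from the arithmetic, not something Nvidia has stated.

```python
# Active pixel rate (pixels per second) for the port limits quoted above; blanking ignored.


def pixel_rate(width: int, height: int, hz: int) -> int:
    return width * height * hz


LIMITS = {
    "8K @ 60 Hz": (7680, 4320, 60),
    "7680x2160 @ 120 Hz": (7680, 2160, 120),
    "4K @ 240 Hz": (3840, 2160, 240),
}

if __name__ == "__main__":
    for name, mode in LIMITS.items():
        print(f"{name}: {pixel_rate(*mode) / 1e9:.2f} Gpixel/s")  # each prints 1.99
```

If the budget really is fixed, upscaling 4K to 8K in the display would let the port spend it on refresh rate instead of resolution.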

If it's better than the TV's traditional non-AI upscaling overall, then it's a win, especially for 4K-to-8K local content, including gaming at 4K output resolution (and even variable-bit-rate "4K" streaming), where it's working from fairly high detail to start with.

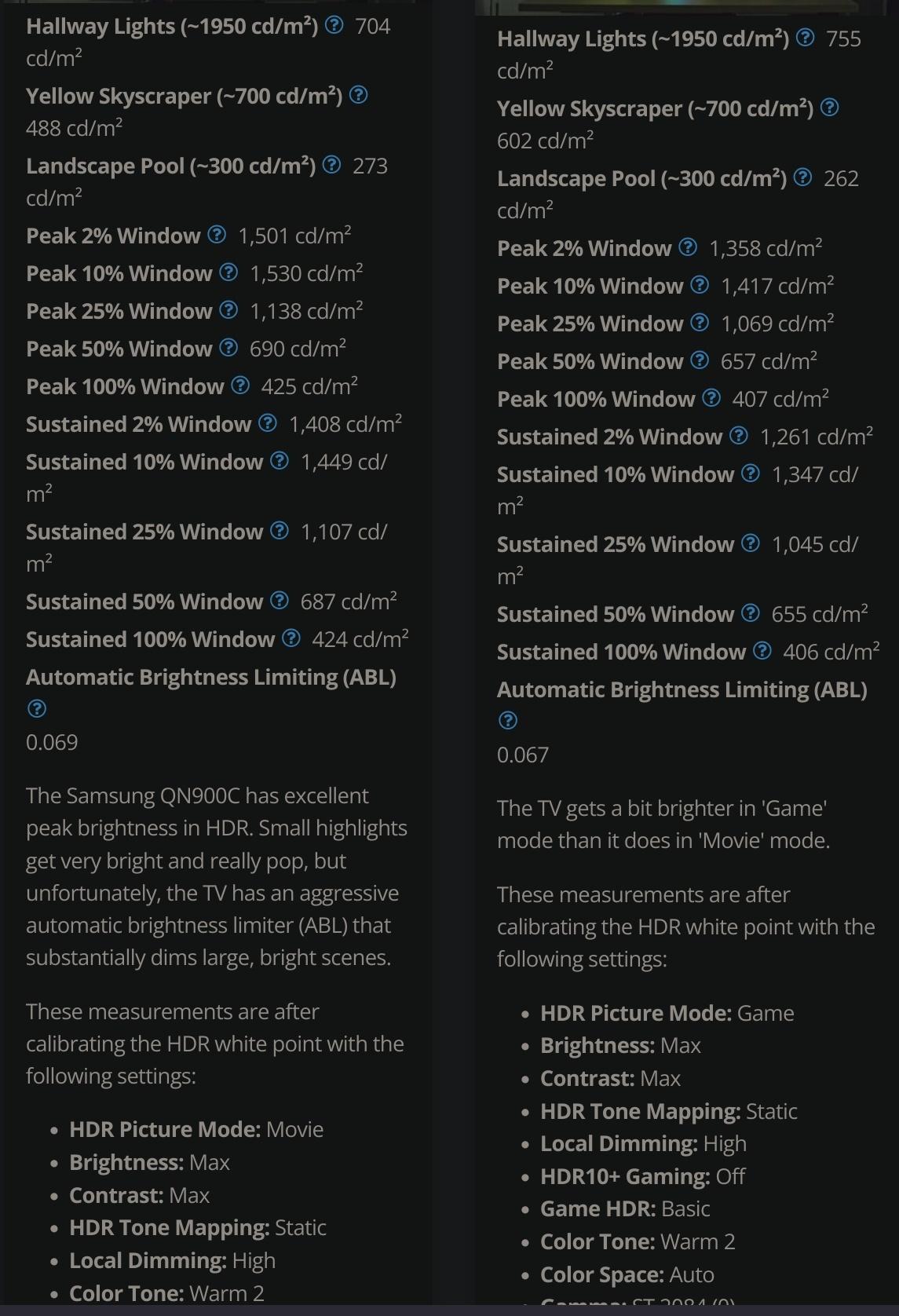

These are my thoughts as well, as mentioned above, and the reason why I think that, as a PC monitor, the difference from the QN900C might not be all that big unless the actual panel has changed or the OCB (One Connect Box) got real updates to support 240 Hz, if that is not fake.

For various reasons, I guess very few people would actually consider either one as a PC monitor, so one of us might have to bite the bullet here and get one to test ourselves.

Someone in the AVS Forum thread brought one home last night. He said he's setting it up tonight. It's an 85" one, no less, lol.

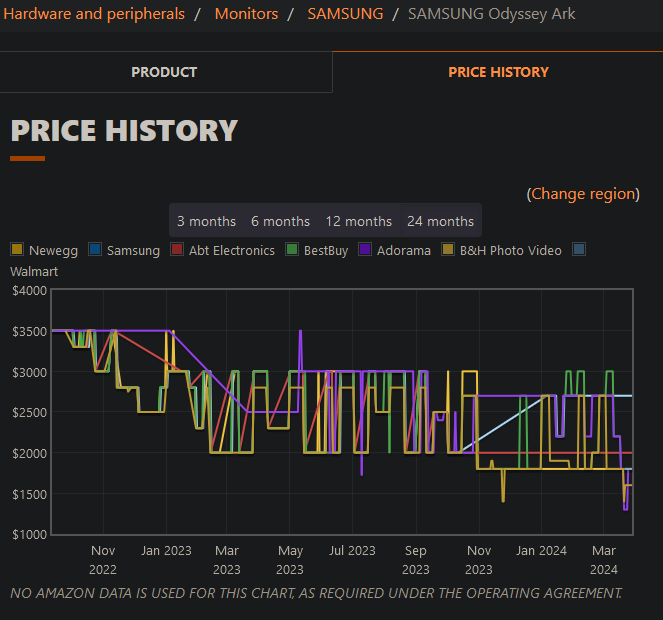

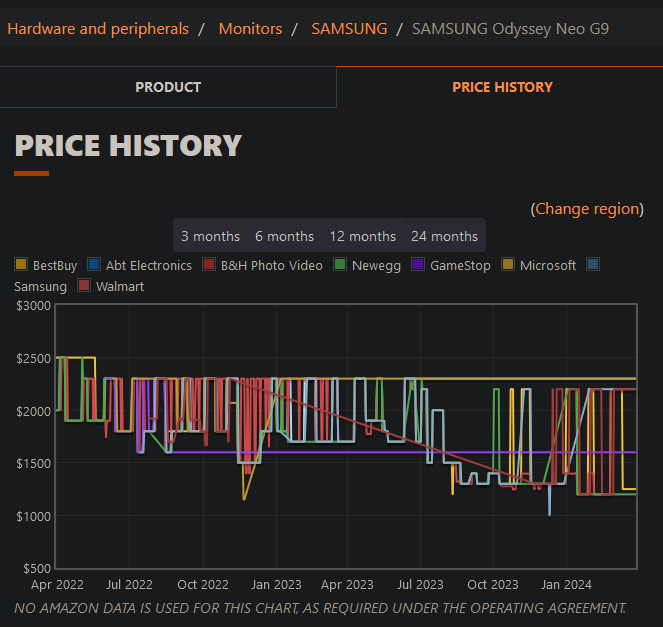

Personally, I'm waiting out the big Samsung price drops that happen even at the four-month mark, let alone six to eight months out. They really charge a massive early-adopter fee on stuff like this. I'm very curious about people's experience with them in the meantime, though, and looking forward to a detailed RTINGS review, among others.

Samsung price history talk:

I'm eagerly waiting on some more detailed reviews where they at least put it through its paces on a powerful PC gaming rig, but I'm in no hurry at that price tag, plus the 5000-series GPUs won't be out until 2025.

Since they always ask a bigger early-adopter price, it's worth waiting it out, kind of like buying a car at the end of the model year, because by then prices will have dropped a lot. It usually doesn't even take that long for Samsung to drop the price. Plus, some people qualify for a Samsung discount on top of that (though buying from Samsung removes the ability to add a Best Buy warranty, even though, ironically, you can pick up the Samsung purchase at Best Buy).

https://pangoly.com/en/browse/monitor/samsung

. .
