Enhanced Interrogator

[H]ard|Gawd

- Joined: Mar 23, 2013
- Messages: 1,437

Sad to hear you're getting out completely, JBL, but I'm glad you gave us a lot of good posts and insights over the years.

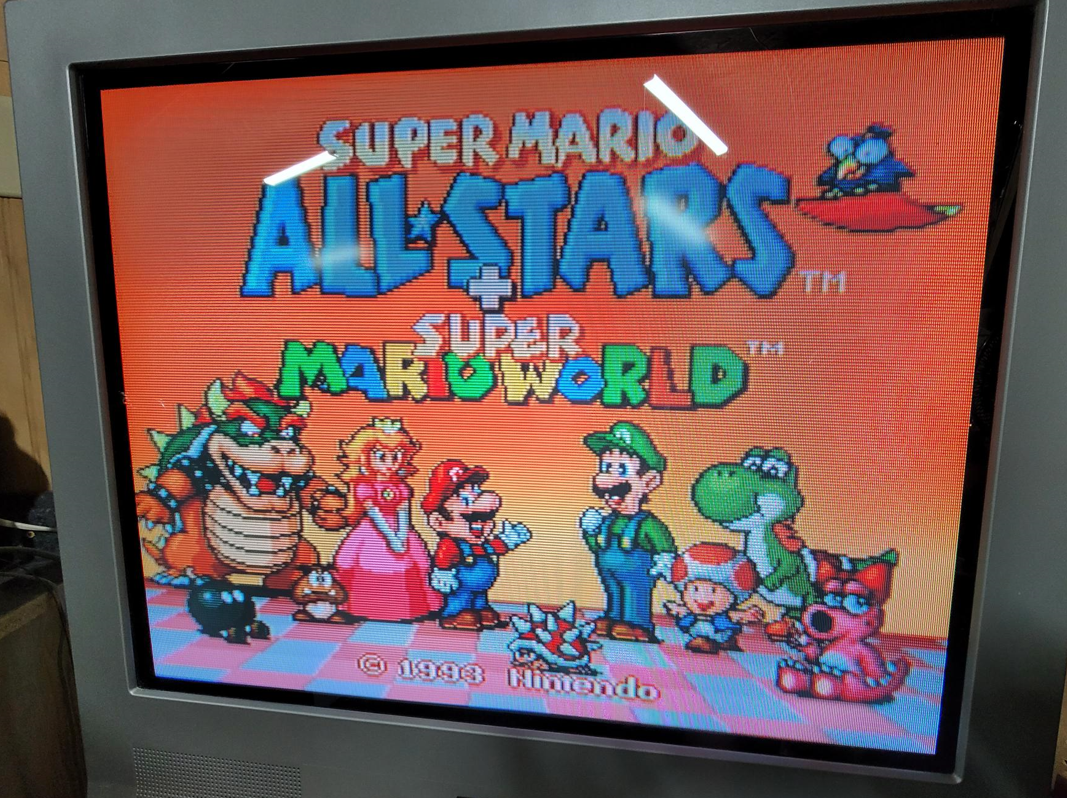

I gotta say, though, if I had to get rid of all my tubes and only keep one, it would definitely be a 15kHz set, whether a PVM or even a plain old TV. I would miss my PC monitors, but life would go on. But it would be tough to not be able to play Mario Bros 3 or Super Metroid on a proper 240p CRT.
