Mchart

Supreme [H]ardness

- Joined: Aug 7, 2004
- Messages: 6,588

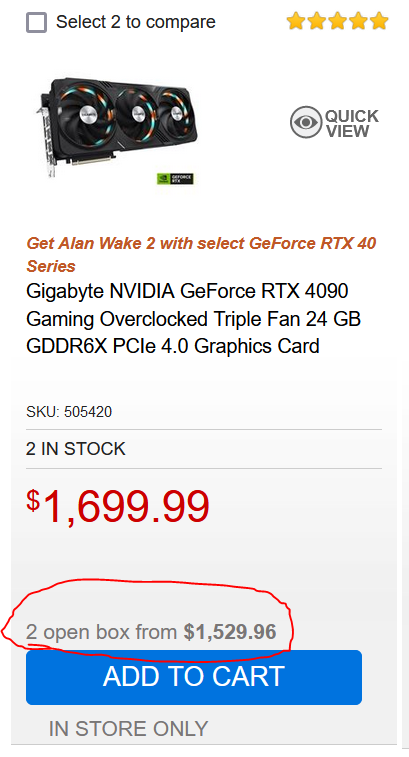

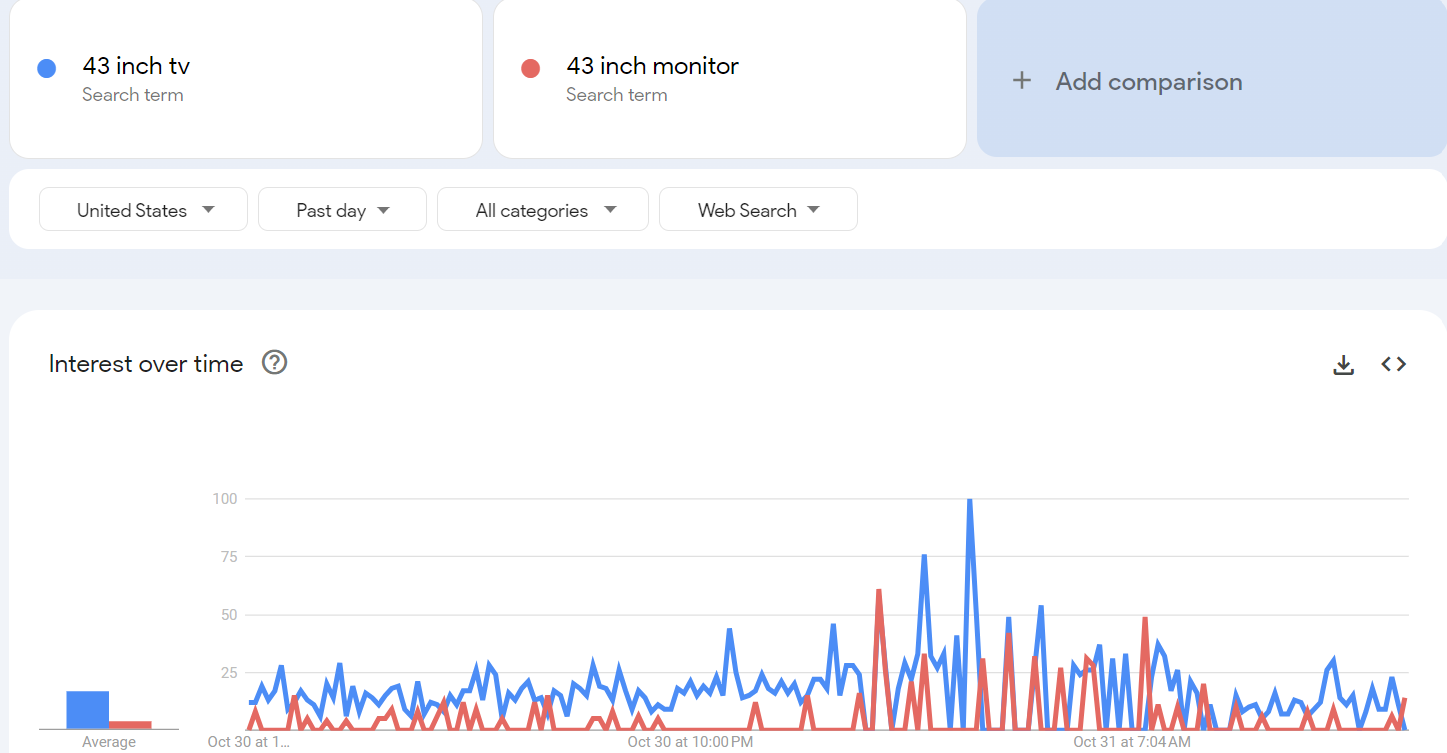

I still don't understand this. I would have thought that with the China ban on top-tier GPUs, prices would have dropped, unless Chinese buyers are circumventing the export ban and buying from outside markets en masse. You'll be spending upwards of $1800-$2100 for a 4090 at this point because of external factors.
